Background

I’ve been fascinated by high-volume, fault-tolerant data storage systems for a long time. I started my data storage setup in earnest with a 4-disk RAID 5 array on an ARC-1210 controller installed in my daily-driver Windows desktop. When that array inevitably filled up, I added another array on a second ARC-1210. That slowly filled up, too, and I knew I couldn’t just keep stuffing RAID cards in my desktop; I had to build a serious file storage server eventually.

I considered many different storage options and configurations, including a large hardware-controlled RAID system on a Windows or Linux environment, a software-controlled array in a Windows-based server, a Drobo-type “keep it simple, stupid” system, and continuing to simply add more drives to my desktop computer. None of these options seemed to address all my requirements very well. I eventually stumbled upon FreeNAS, a FreeBSD-based, network storage oriented operating system that uses the ZFS file system and a web interface to configure network sharing and other settings. While most of the setup and system management is done through this web interface, but you can extend the machine’s capabilities quite a bit through the terminal via SSH. In this article, I’ll go over my hardware selections, the build and configuration process, some of the other applications I have running on the machine, and a bit of theory about how ZFS allocates array storage space and how it can be tuned to reduce allocation overhead.

I want to make a quick note before diving into this excessively long article. I started writing the first few sections intending to create a detailed build log for my server. I took pictures and documented every step as well as I could; I had been planning this server over the span of several years, so I was understandably excited to get into it. As the article progressed, it started to shift into a combination of a build log and a tutorial. While the exact set of parts and the sequence of their assembly and configuration will likely be fairly unique to the machine I built, I hope that people undertaking a similar project will find helpful information in some portion of this article. My contact information is at the bottom of this article if you would like to get in touch with me for any reason.

[2017 Update:] I've had this server for a little over a year now and have made several changes to the configuration. Those changes include the following:

- Putting the sever in a proper rack

- Getting an NVMe SSD for virtual machines

- Installing another 8 drives in the front bays (along with another M1015)

- Installing an internally-mounted "scratch disk"

- Creating a front fan shroud for 3x 140mm fans to increase cooling performance

[2018 Update:] The server has been going strong for two years now. I've made some more changes to the system in the past year:

- Upgrading to 10GbE copper networking

- Replacing the internal "scratch disk" with 2x 2TB SATA SSDs shared via iSCSI

[2019 Update:] Three years! I've made some major changes this year to support the addition of a second 24-bay chassis:

- Replaced 3x M1015 cards with 1x LSI 9305-24i HBA

- Added external SAS HBA to support expansion chassis, LSI 9305-16e

- Added second 846 chassis for expansion

- Added 16 new 8TB HDDs

- Overhauled the fan control setup to support more fan zones

- Upgraded memory to 128 GB

- Added a second UPS to handle the increased power load

- Added a 280GB Optane 900p for an L2ARC

- Added cable management arms

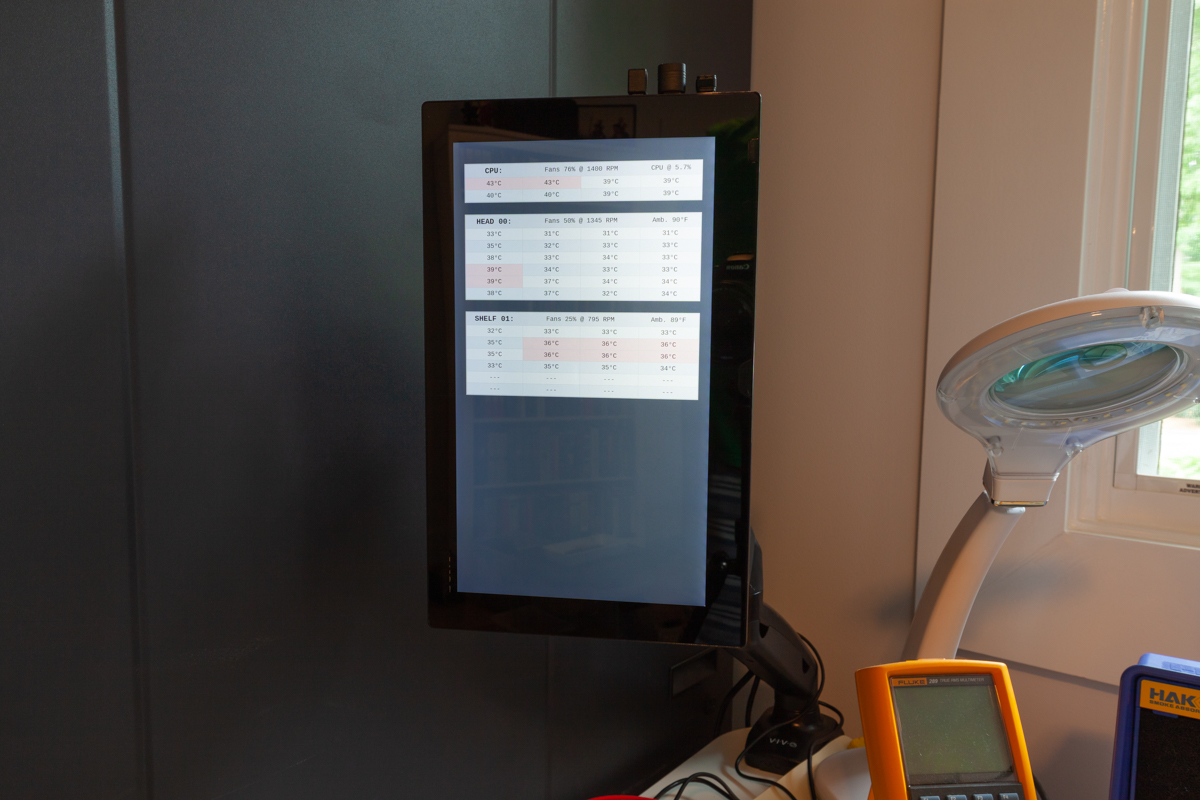

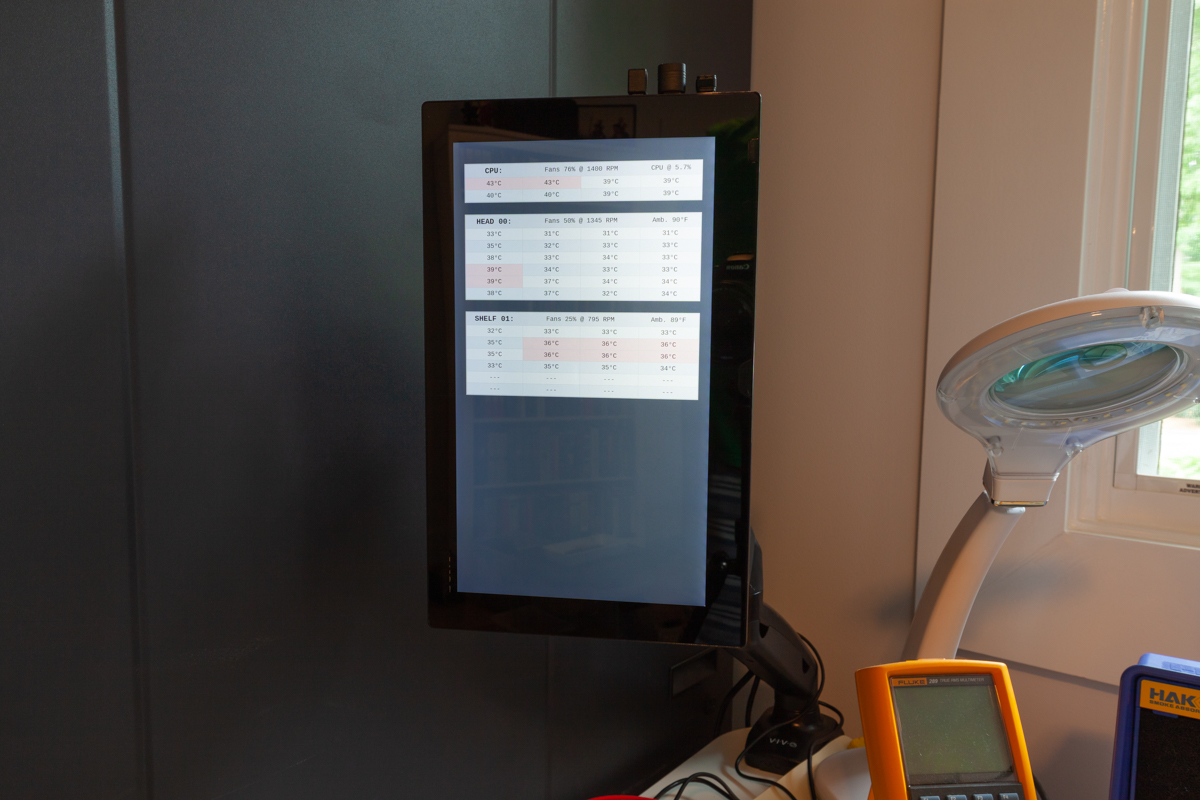

- Added external display to show live system temperature and fan speed data

[2020 Update:] I made a few minor upgrades through 2020:

- Added 8x 8TB disks to fill the second chassis

- Replaced all the 4TB disks with 8TB disks (several 4TB disks had failed)

- Replaced the CPU with a Xeon E5-2666 v3 (10C/20T @ 2.9GHz)

- Removed all the VM/bhyve stuff from the FreeNAS system and moved it onto a dedicated Proxmox host

[2021 Update:] I added another expansion shelf in 2021:

- Added a 36-bay SC847 chassis (24 bays in front, 12 in rear)

- Added 34x 8TB disks (4x additional 8-wide Z2 vdevs and 2 spares)

- Added 2x 2TB SSDs to the scratch disk pool

- Replaced the original 1500VA UPS

I've made updates to the original text to reflect these changes, marking paragraphs as updated where appropriate. The system summary section just below will always include whatever the latest configuration is. There are places where I've included original text from the article even though it's no longer applicable to my build; I've done so because in these cases, the old might be helpful (for example, info on preparing the Ikea LACK table to hold the server).

Contents:

- Background

- System Summary

- Photos

- Parts and Price List

- Parts Selection Details

- Build Process

- Flashing the M1015 HBA Cards & Installing FreeNAS

- Initial FreeNAS Configuration

- Calculating & Minimizing ZFS Allocation Overhead

- Setting Up Storage Volumes

- Further System Configuration

- Setting Up SMB Sharing

- Adjusting Permissions

- Setting Up iSCSI Sharing [2018 Update]

- Performing Initial bhyve Configuration

- Creating a bhyve VM

- Configuring rclone in a bhyve

- Expanding Beyond a Single Chassis [2019 Update]

- Closing and Summary

System Summary

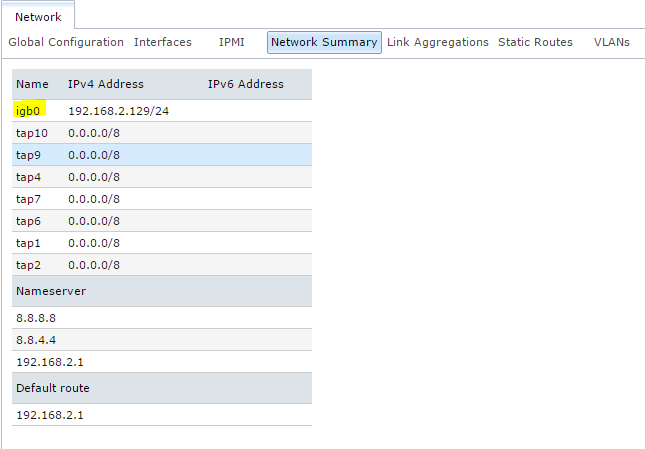

The server is running on FreeNAS 12.0 with 6x 8-drive RAID-Z2 virtual devices (vdevs) with 8TB disks for a total of ~250 TB of usable, redundant space. These drives in the main chassis (or "head unit") are connected to an LSI 9305-24i. The drives in the second chassis (or "expansion shelf") are connected with an LSI 9305-16e and a SAS3 expander backplane. The boot volume is stored on 2x mirrored Intel 540s 120 GB SSDs. I have an internally-mouted pair of 2 TB SATA SSDs striped together for ~4TB of fast, temporary data storage. The head unit is housed in a SuperMicro SC846 chassis with two 920W redundant PSUs; the expansion has the same. The system is built on a SuperMicro X10SRL-F LGA2011-v3 motherboard. I’m using an Intel Xeon E5-2666 v3 (10C/20T @ 2.9GHz) and 8 modules of 16GB DDR4 Samsung ECC/registered memory for a total of 128GB of RAM. I’m using a Noctua cooler on the CPU and I replaced the noisy stock chassis fans with quieter Noctua fans. I also created a front fan shroud that holds 3x 140mm fans to increase cooling while keeping noise levels down. I have one mounted on both the head unit and the expansion shelf. I have two APC 1500VA Smart-UPSs and have them and the file server in a 42U Dell rack cabinet from Craigslist.

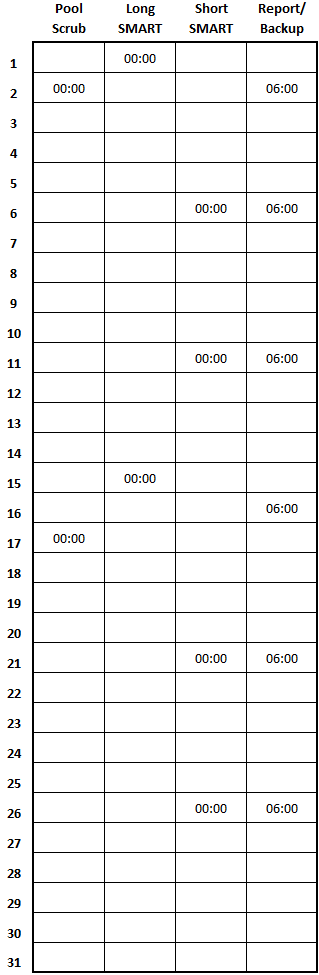

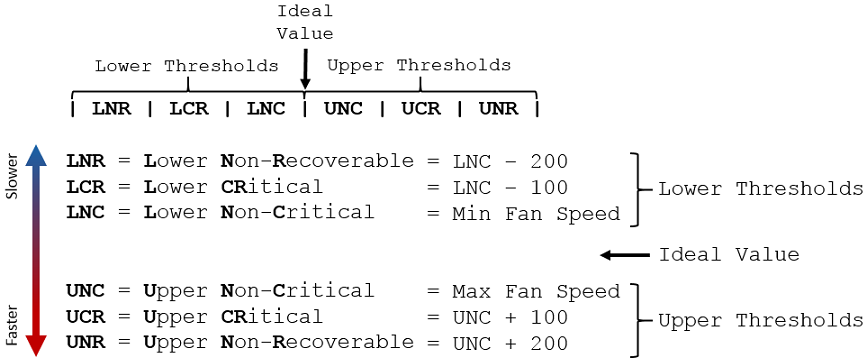

I set my primary dataset with recordsize = 1MiB to account for data block placement in an 8-drive Z2 array. Most of the data is shared via SMB/CIFS. I also have an iSCSI share on the main pool mounted on my desktop for my Steam library, and an iSCSI share on the mirrored SATA SSDs mounted in one of the VMs (and then reshared via SMB). The system hosts several different Debian-based bhyve VMs to run various services I use on a day-to-day basis (including nginx, pi-hole, irssi, an OpenVPN server, and an rclone client I use to back up my data to ~~the cloud~~). I have scripts set up to send SMART and zpool status reports to my email address on a weekly basis and scrubs/SMART checks scheduled every 2 weeks. I also have a script that automatically adjust the chassis fan speeds based on live HDD and CPU temps.

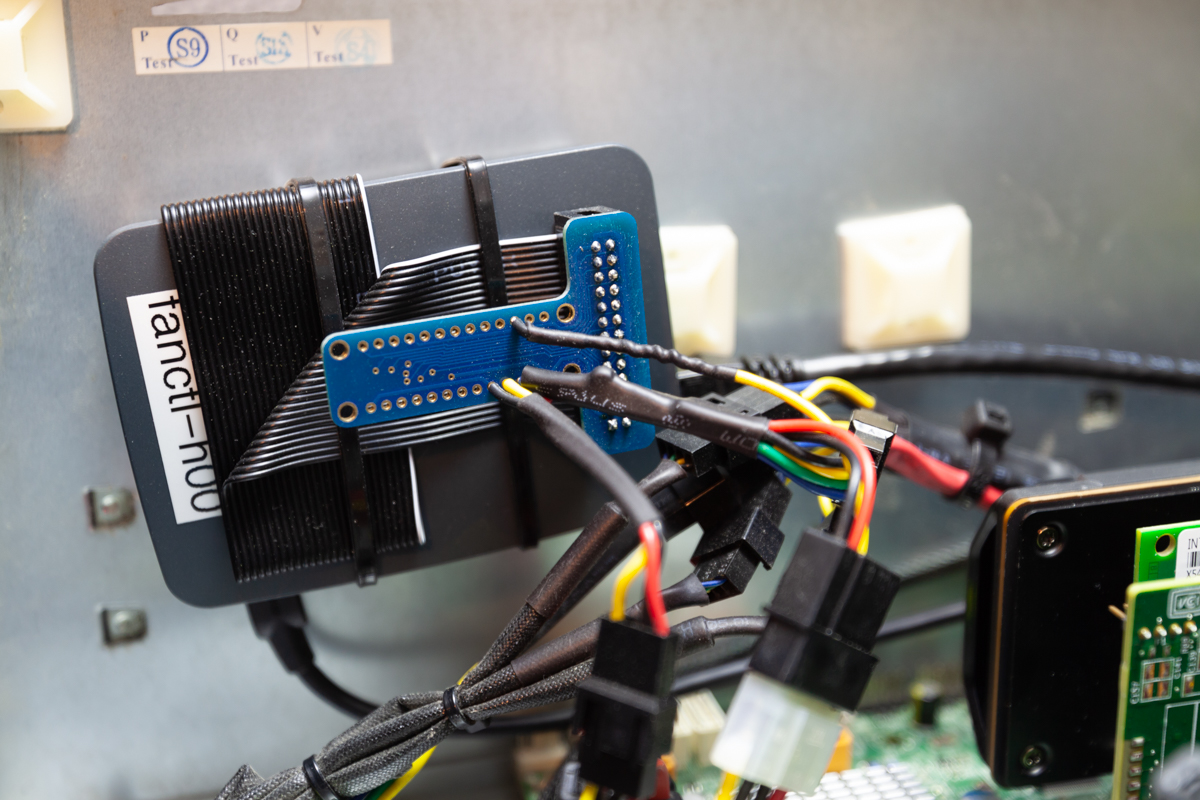

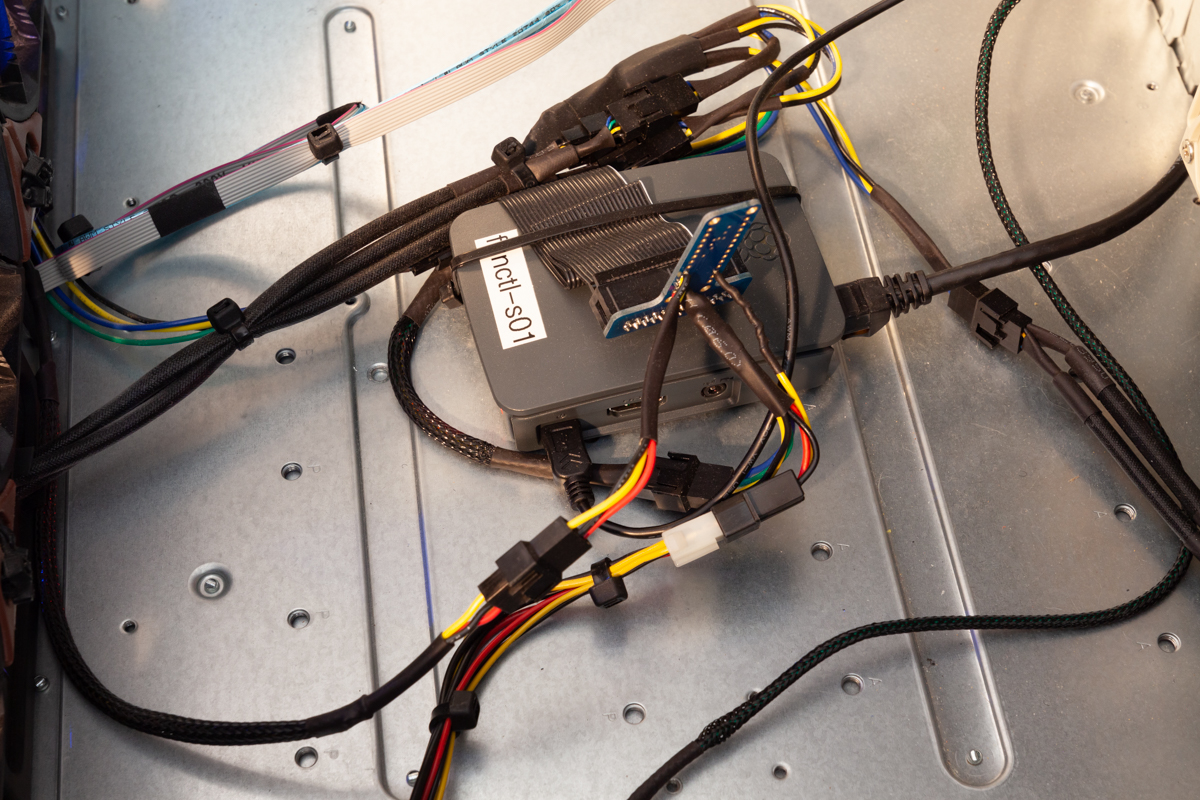

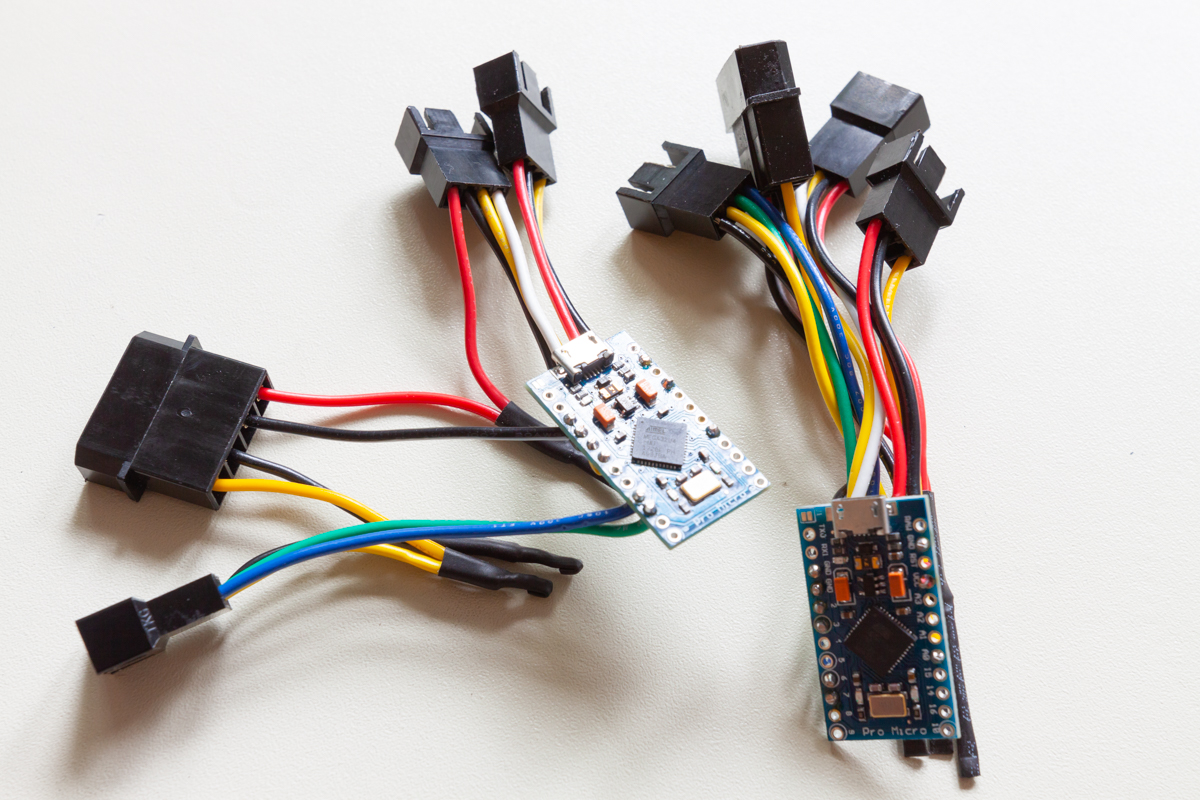

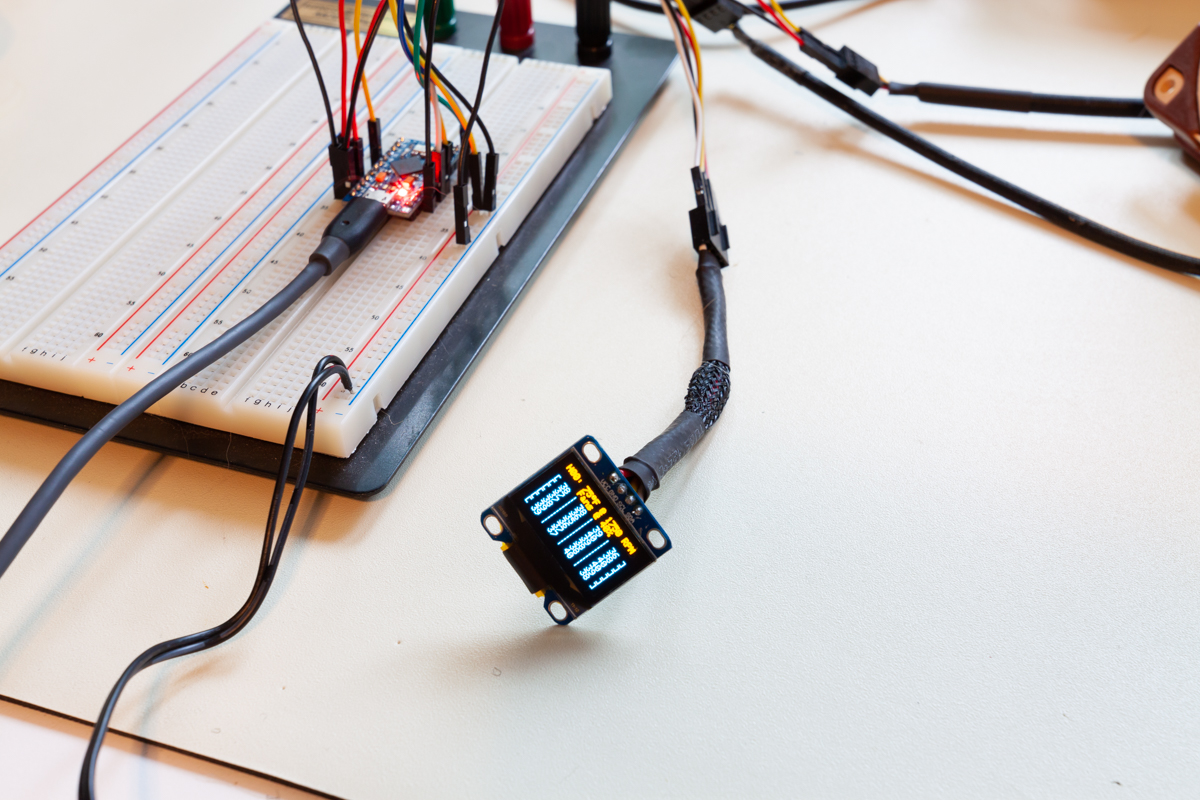

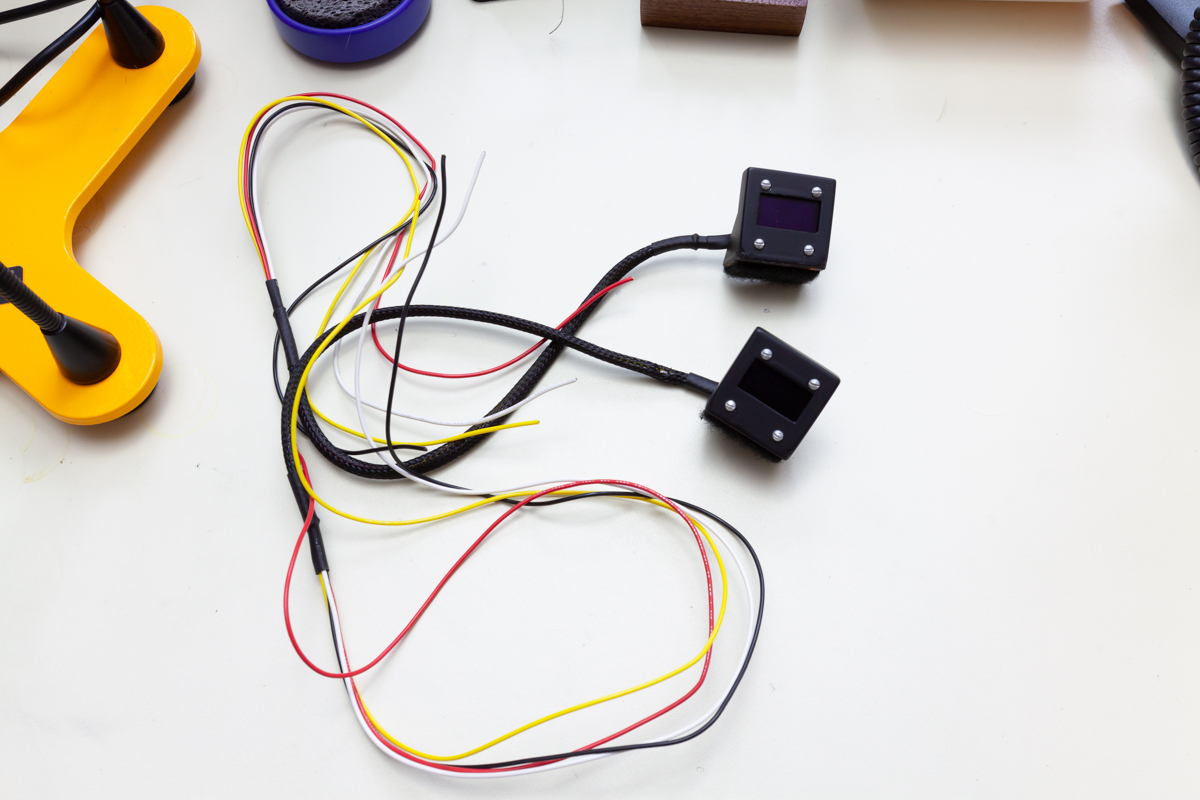

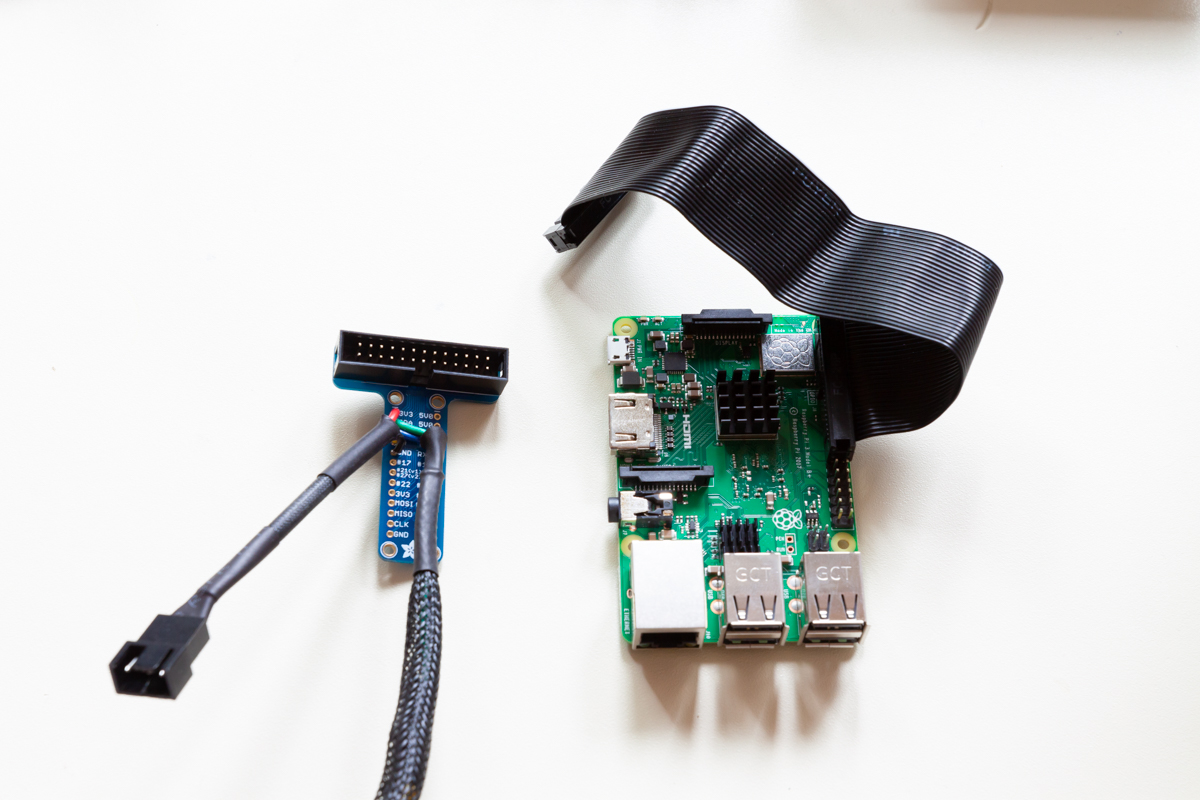

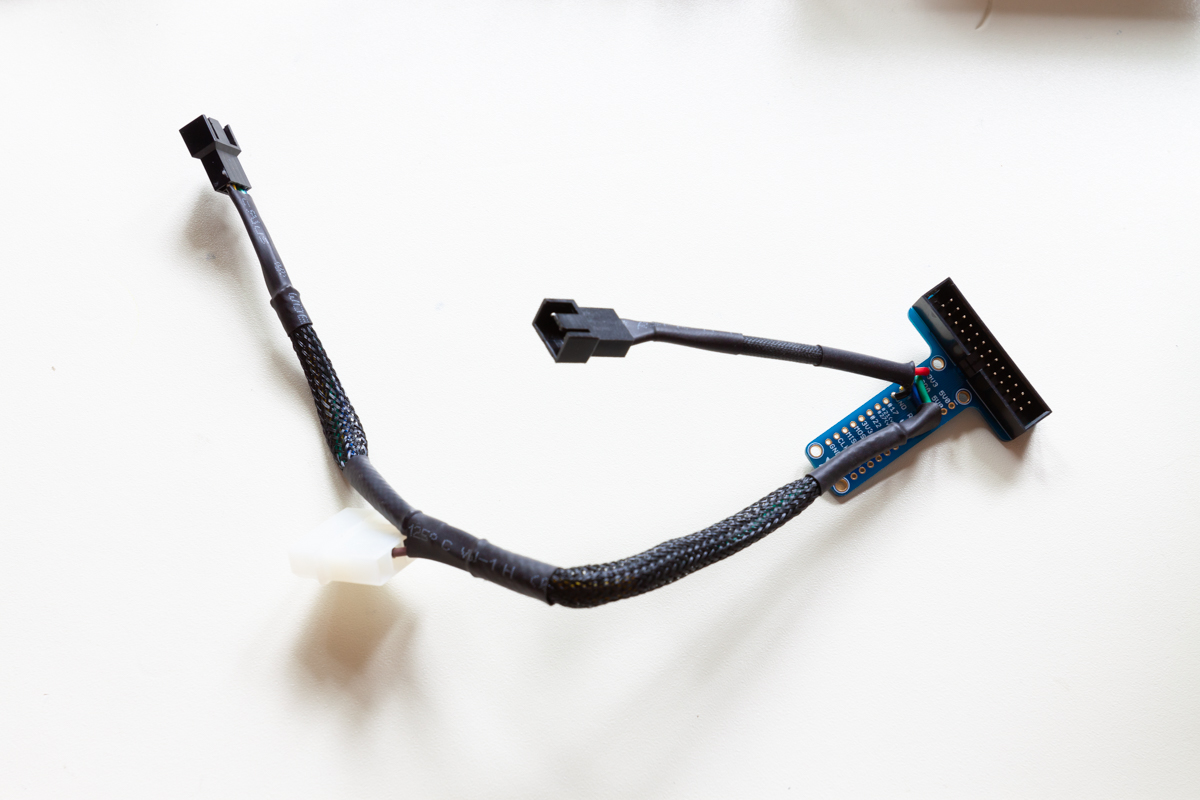

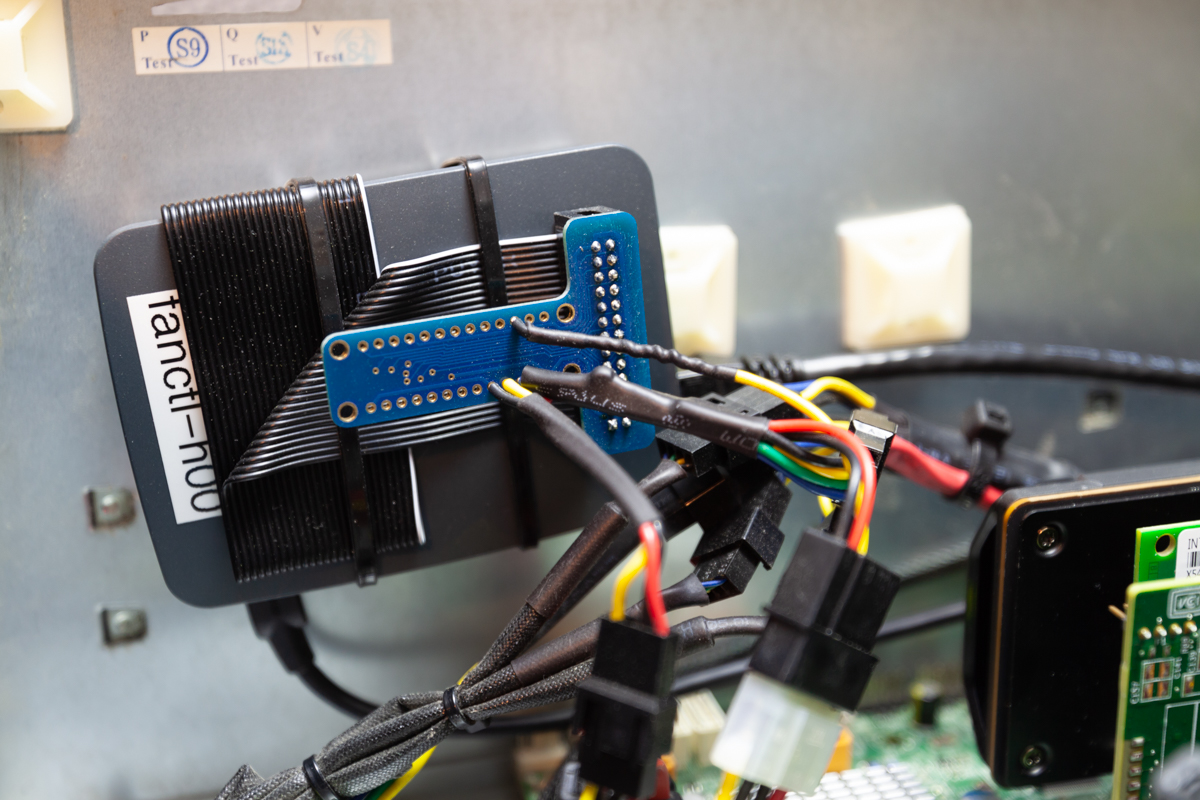

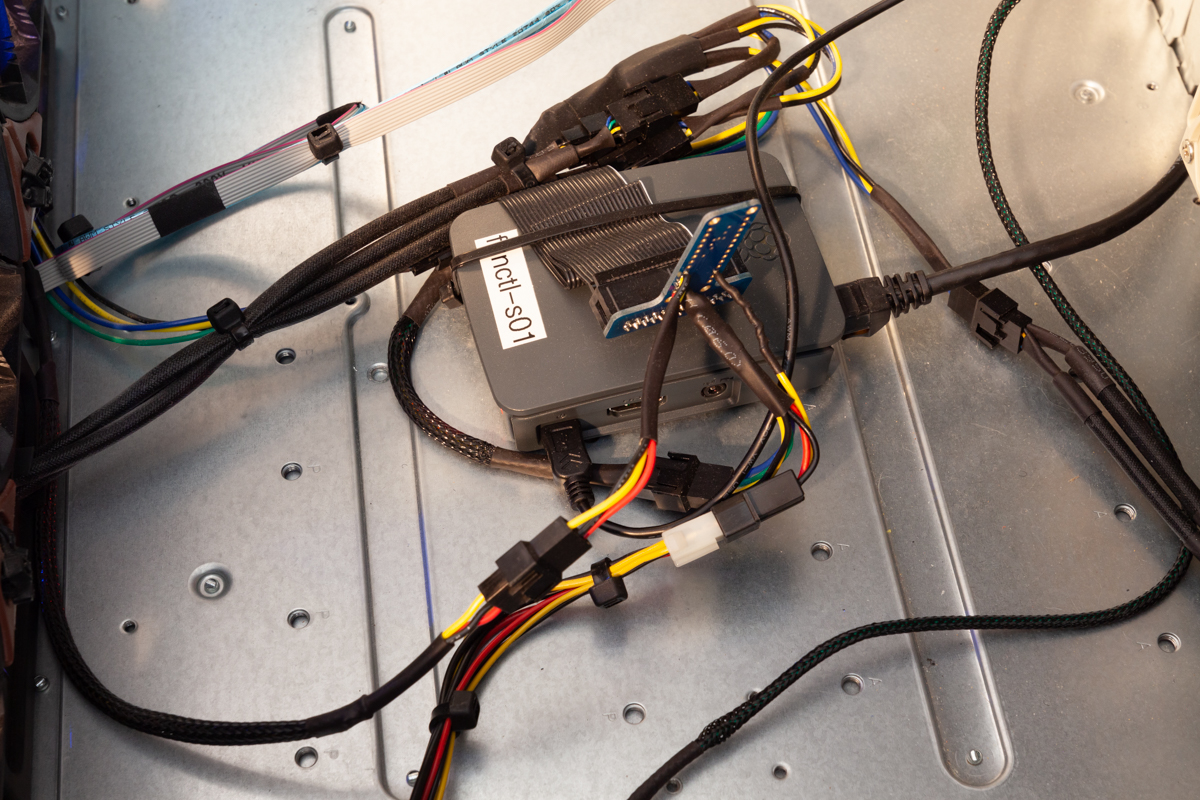

The fan control for each chassis is now handled through Raspberry Pis that output the appropraite PWM signal. I still have the script running on the FreeNAS system to monitor drive temperatures, but now instead of setting fan speeds by sending ipmitool commands, it sends the commands to the Raspberry Pi systems in each chassis via Python's sockets module. The Pis also measure fan speed and ambient chassis temperature which is all sent to another Pi running a webserver with flask, socket.io, and redis that displays lots of system vitals.

Of course the primary purpose of the NAS is data storage. The vast majority of the data I store is from the high-resolution photography and videography I've done over the past 10-15 years, most of which is stored in some sort of raw format. I could probably go through and delete about 95% (even 99%) of the data, but I would rather keep everything around so I can pretend that maybe some day, someone will be interested in looking at them (maybe I'll have very patient kids?). I look at this use case as a modern version of the boxes full photo albums and slides my parents and grandparents kept in their basement for decades and never looked at. Even if no one ever looks at my pictures and videos, it's still be a really fun project to work on. By the way, if you're interested in looking at some of my favorite photographs, you can find them here!

Photos

The front of the server with the front fan shroud on. The top chassis is the head unit, the lower chassis is the expansion shelf.

The front of the server with the front fan shroud removed (note the weather stripping tape around the edges).

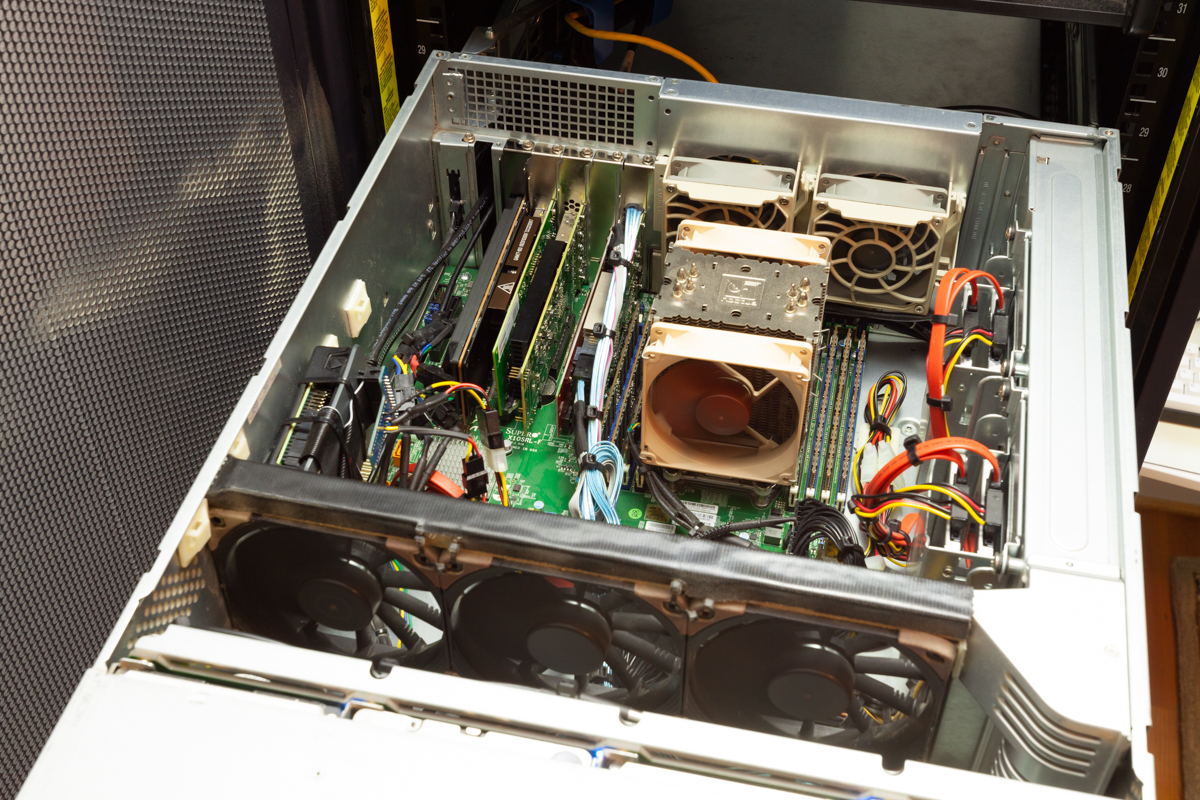

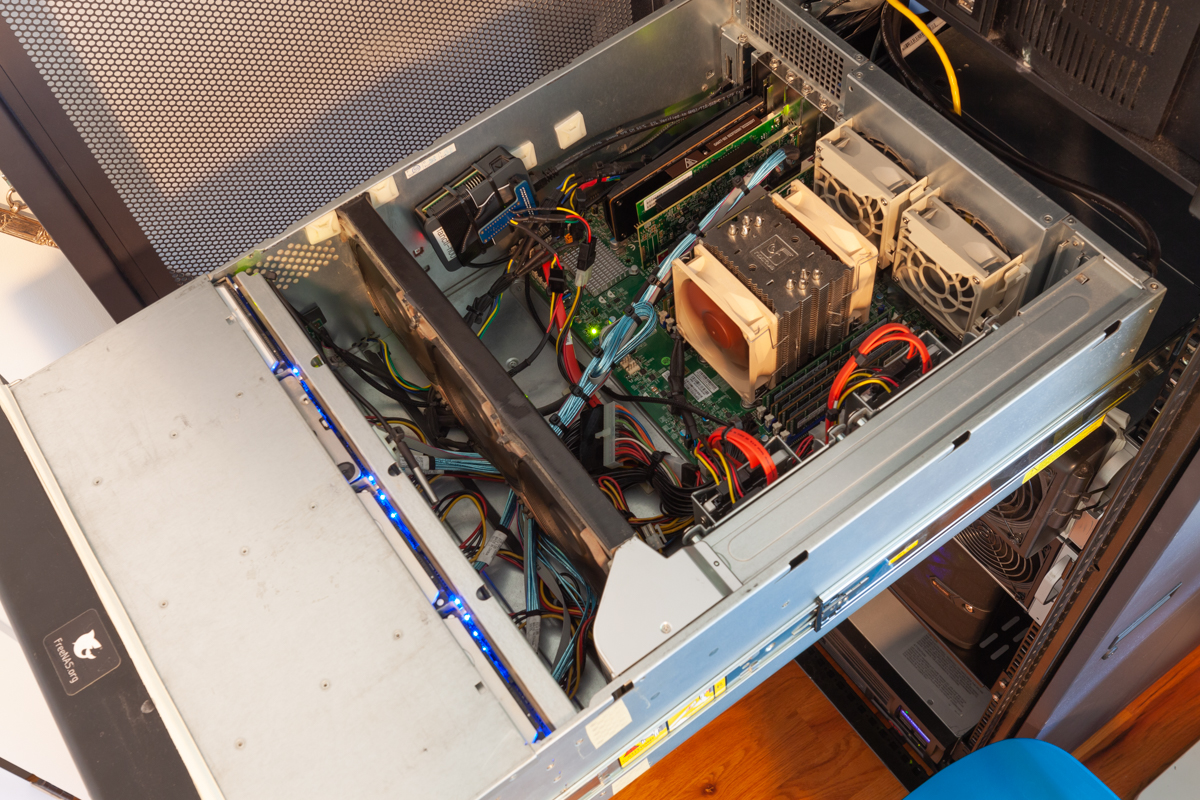

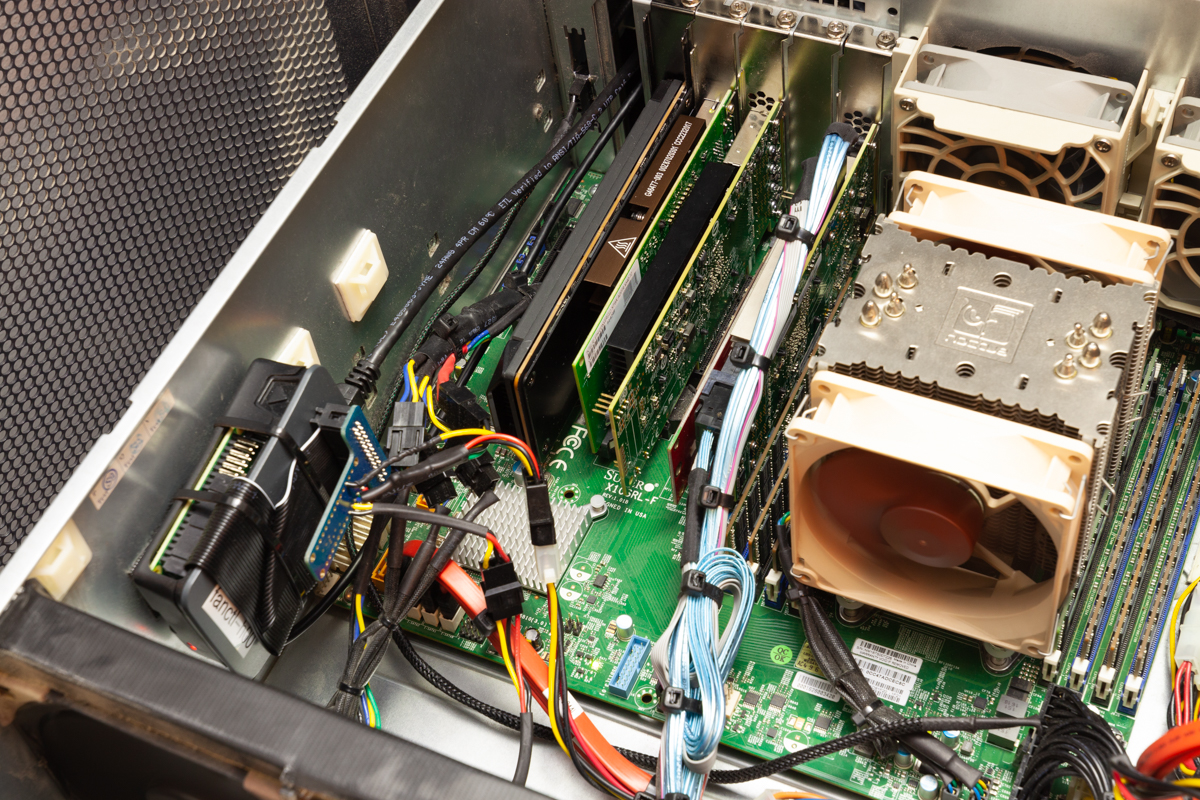

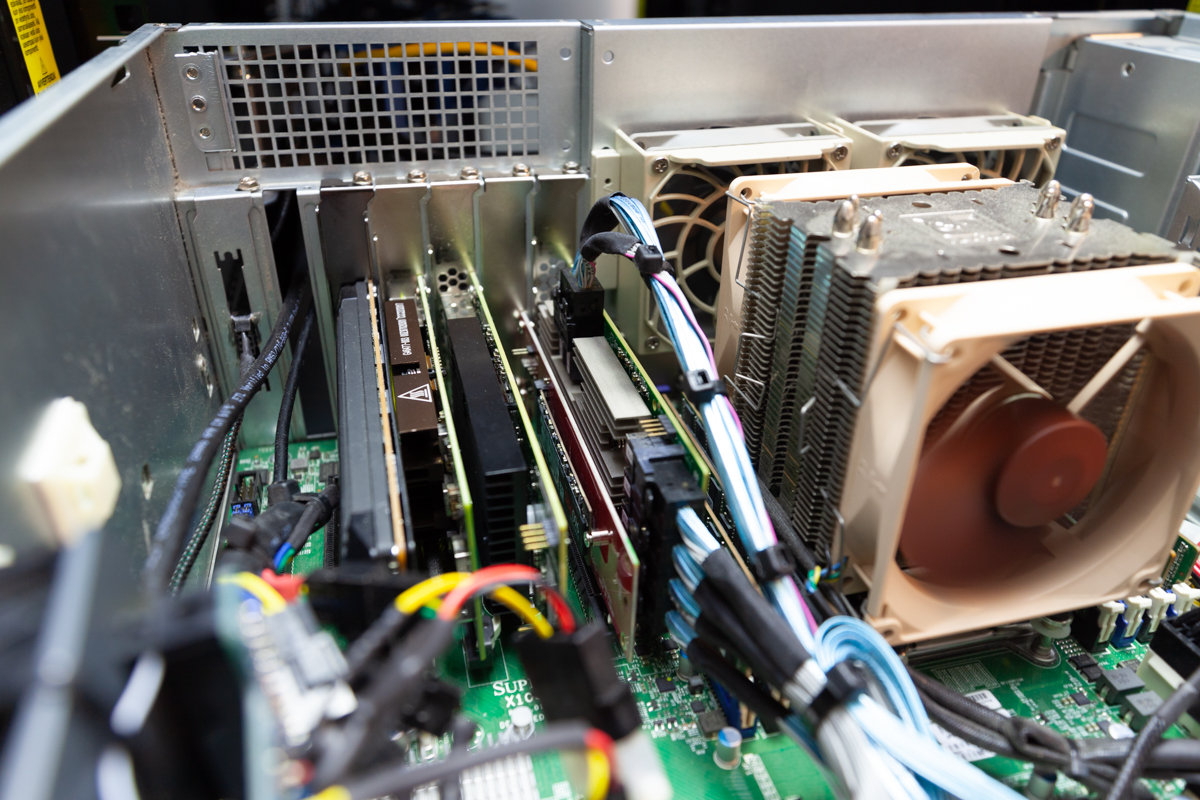

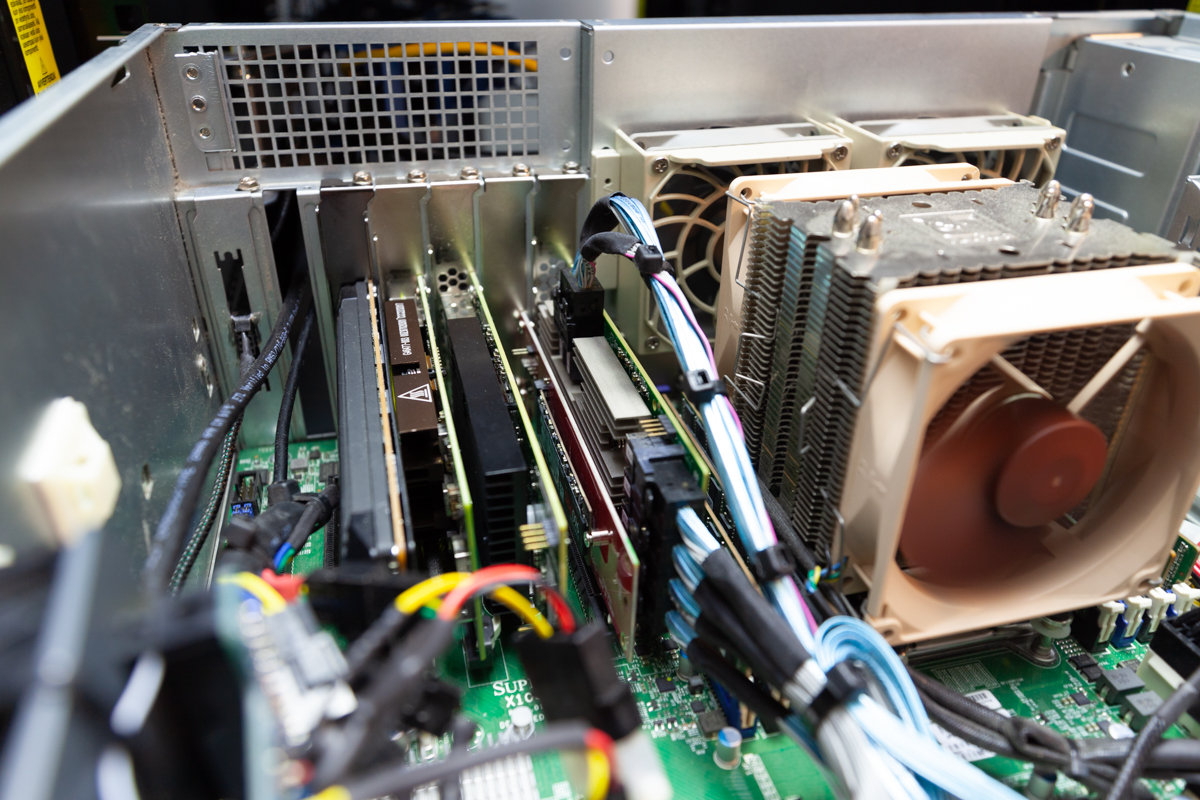

A few different views of the inside of the head unit.

A view of the fan wall, secured with zip ties. The strip on top of the fan wall helps to seal off the two sections of the chassis, making sure air flows through the drive bays rather than back over top of the fan wall.

Inside of the expansion shelf chassis.

All expansion shelf drives connected via a single SAS3 cable.

The fan control Raspberry Pi in the head unit and in the shelf.

The dedicated 11" display for system stats, including drive temperatures, ambient chassis temp, CPU temp and load, and fan speeds.

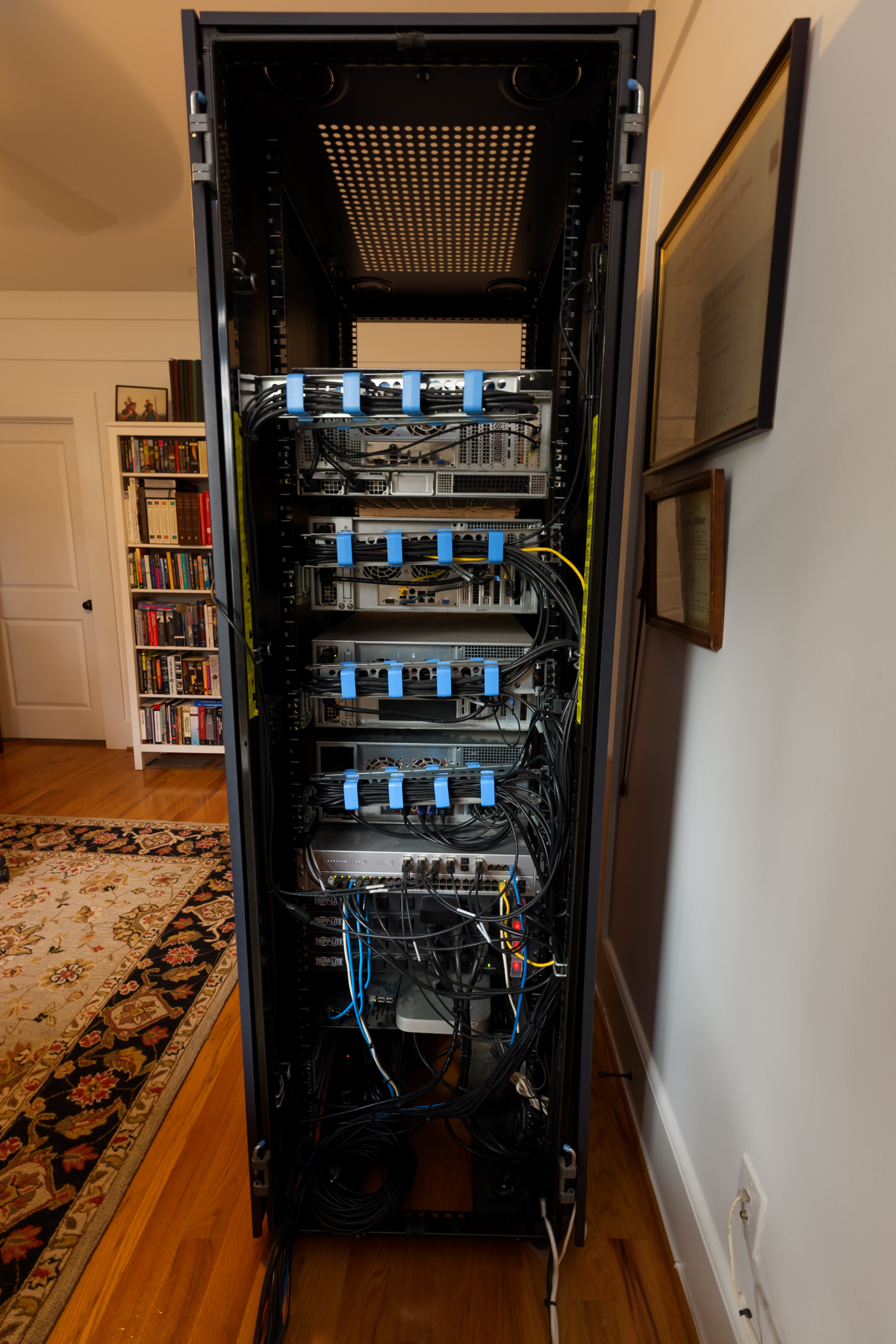

A view of the front and back of the rack. From top to bottom, I have my Proxmox server, the FreeNAS head unit, the expansion shelf, my new workstation, a FreeNAS mini, a UniFi NVR, and some UPSs.

The whole rack rolls out several feet so I can get behind it when needed.

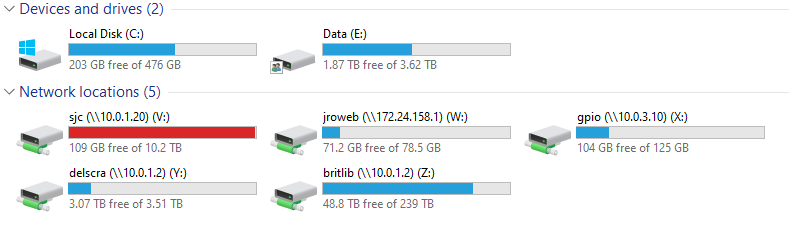

Here is a screenshot of the mounted shares.

Parts and Price List

[2019 Update:] Now that the system has gone through so many changes, I've decided to restructure this table to group things based on the yearly upgrades. Parts that have been functionally replaced in a later upgrade will be marked with strikethrough text. These replaced parts are still included in the price totals.

| Part | Make/Model | Qty | $ Per | $ Total | From |

|---|---|---|---|---|---|

| Original Build (2016) | |||||

| Chassis | SuperMicro SC846 | 1 | $200 | $200 | TheServerStore |

| Motherboard | SuperMicro X10SRL-F | 1 | $272 | $272 | Amazon |

| RAM | Samsung M393A2G40DB0-CPB (16GB) | 4 | $80 | $320 | Amazon |

| PSU | SuperMicro PWS-920P-SQ | 2 | $118 | $236 | eBay |

| Backplane | SuperMicro BPN-SAS-846A | 1 | $250 | $250 | eBay |

| Data Drive | WD 8 TB Red (8 + 1 Spare) | 9 | $319 | $2,871 | Amazon |

| Data Drive | WD 4 TB Red (Already Owned) | 8 | $0 | $0 | - |

| Boot Device | Intel 540s 120GB SSD | 2 | $53 | $105 | Amazon |

| CPU Cooler | Noctua NH-U9DXi4 | 1 | $56 | $56 | Amazon |

| 120mm Fan | Noctua NF-F12 iPPC 3000 PWM | 3 | $24 | $72 | Amazon |

| 80mm Fan | Noctua NF-R8 PWM | 2 | $10 | $20 | Amazon |

| UPS | APC SUA1500RM2U Smart-UPS | 1 | $300 | $300 | eBay |

| SAS Cable | SFF-8087 to SFF-8087 | 4 | $11 | $44 | Amazon |

| HDD Screws | SuperMicro HDD Screws (100 ct) | 1 | $8 | $8 | Amazon |

| Tax, Misc. Cables, etc. | Tax, misc. | 1 | $250 | $250 | - |

| SSD Cage | SuperMicro MCP-220-84603-0N | 1 | $25 | $25 | eBay |

| Original System Total: | $5,528 | ||||

| 2017 Update | |||||

| Data Drive | WD 8 TB Red (from WD EasyStore) | 8 | $130 | $1,040 | Best Buy |

| Front Fan Shroud | (3D Printed PLA) | 1 | $180 | $180 | 3DHubs |

| 140mm Fan | Noctua NF-A14 iPPC 3000 PWM | 3 | $25 | $75 | Amazon |

| Rack Cabinet | Dell 42U | 1 | $300 | $300 | Craigslist |

| Server Rails | Supermicro MCP-290-00057-0N | 1 | $75 | $75 | eBay |

| 2017 Update Total: | $2,207 | ||||

| 2018 Update | |||||

| 10GbE NIC | Intel X540T2 | 1 | $248 | $248 | Amazon |

| SSD Cage | SuperMicro MCP-220-84603-0N | 1 | $25 | $25 | eBay |

| SSD Scratch Disks | Micron MX500 2 TB | 2 | $339 | $678 | Amazon |

| 2018 Update Total: | $976 | ||||

| 2019 Update | |||||

| Data Drive | WD 8 TB Red (from WD EasyStore) | 16 | $130 | $2,080 | Best Buy |

| Expansion Chassis | SuperMicro SC846 | 1 | $250 | $250 | TheServerStore |

| Expansion PSU | SuperMicro PWS-920P-SQ | 2 | $100 | $200 | TheServerStore |

| Server Rails | Supermicro MCP-290-00057-0N | 1 | $100 | $100 | TheServerStore |

| Expansion Backplane | SuperMicro BPN-SAS3-846EL1 | 1 | $630 | $630 | eBay |

| Additional RAM | Samsung M393A2G40DB0-CPB (16GB) | 4 | $151 | $604 | Newegg |

| L2ARC Drive | Intel Optane 900P 280GB AIC | 1 | $133 | $133 | Amazon |

| Internal HBA | LSI SAS 9305-24i | 1 | $570 | $570 | Amazon |

| External HBA | LSI SAS 9305-16e | 1 | $550 | $550 | Amazon |

| Front Fan Shroud | (3D Printed PLA) | 1 | $180 | $180 | 3DHubs |

| 140mm Fan | Noctua NF-A14 iPPC 3000 PWM | 3 | $25 | $75 | Amazon |

| 120mm Fan | Noctua NF-F12 iPPC 3000 PWM | 3 | $24 | $72 | Amazon |

| 80mm Fan | Noctua NF-R8 PWM | 2 | $10 | $20 | Amazon |

| SAS Ext. to Int. Adapter | Supermicro AOM-SAS3-8I8E | 1 | $80 | $80 | Supermicro Store |

| SAS3 Ext. Cable | SuperMicro CBL-SAST-0573 | 1 | $60 | $60 | Supermicro Store |

| SAS3 Int. Cable | SuperMicro CBL-SAST-0593 | 1 | $15 | $15 | Supermicro Store |

| SAS3 to SAS2 Cable | SuperMicro CBL-SAST-0508-02 | 6 | $13 | $78 | Supermicro Store |

| Cable Management Arm | SuperMicro MCP-290-00073-0N | 2 | $56 | $112 | Supermicro Store |

| UPS | APC SUA1500RM2U Smart-UPS | 1 | $300 | $300 | eBay |

| Dual PSU Adapter | Generic Amazon Model | 1 | $7 | $7 | Amazon |

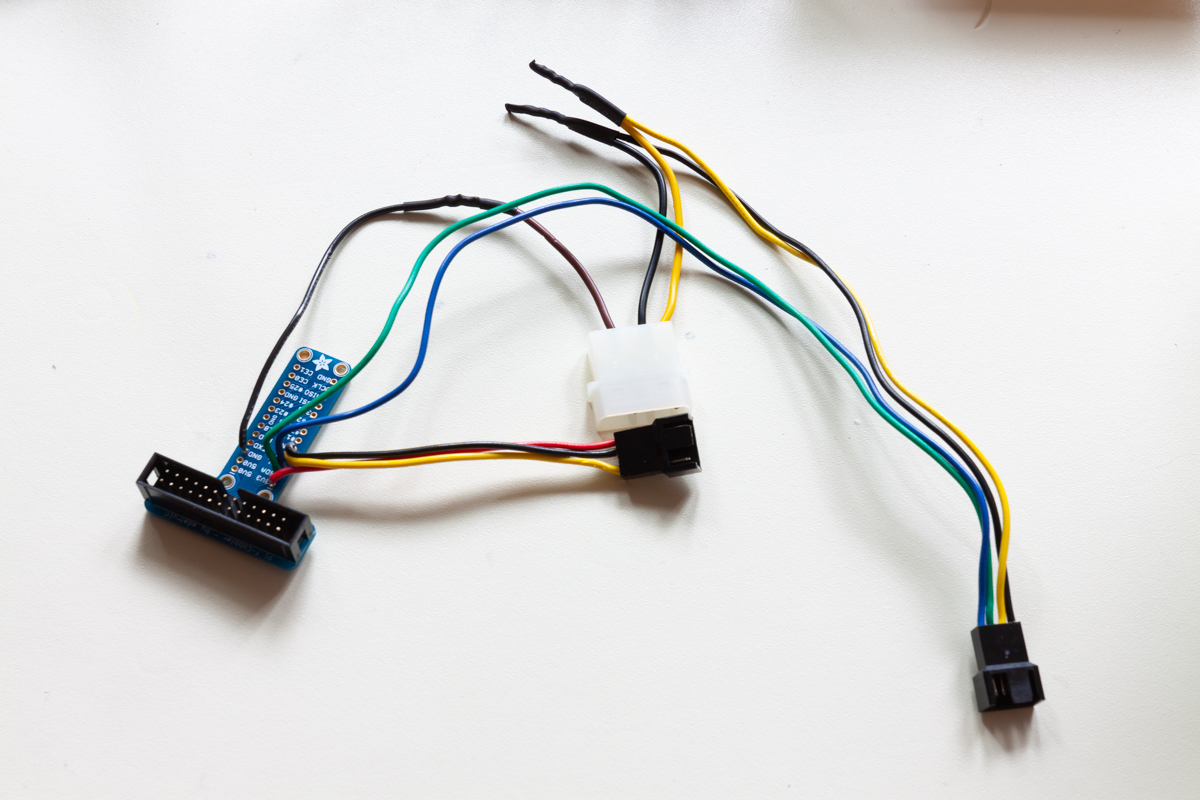

| Rasp. Pi + SD Card | RPi Model B+, 32GB MicroSD Card | 3 | $45 | $135 | Amazon |

| Thermal Probes | Aideepen 5pc DS18B20 | 1 | $12 | $12 | Amazon |

| System Vitals Display | 11" 1080p Generic Touchscreen | 1 | $159 | $159 | Amazon |

| Arm for Display | VIVO VESA Arm | 1 | $32 | $32 | Amazon |

| 2019 Update Total: | $6,454 | ||||

| 2020 Update | |||||

| Data Drive | WD 8 TB Red (from WD Elements) | 13 | $130 | $1,690 | B&H |

| CPU | Intel Xeon E5-2666 V3 | 1 | $190 | $190 | eBay |

| 2020 Update Total: | $1,880 | ||||

| 2021 Update | |||||

| Data Drive | WD 8 TB Red (from WD EasyStore) | 34 | $140.00 | $4,760.00 | Best Buy |

| Expansion Chassis | Supermicro SC847 | 1 | $701.00 | $701.00 | TheServerStore |

| Front Backplane | Supermicro BPN-SAS3-846EL1 | 1 | - | - | TheServerStore |

| Rear Backplane | Supermicro BPN-SAS3-826EL1 | 1 | - | - | TheServerStore |

| PSUs | Supermicro PWS-1K28P-SQ | 2 | - | - | TheServerStore |

| Rails | Supermicro MCP-290-00057-0N | 1 | - | - | TheServerStore |

| SAS3 Int. Cable | SuperMicro CBL-SAST-0593 | 2 | - | - | TheServerStore |

| Front Fan Shroud | (3D Printed PLA) | 1 | $339.00 | $339.00 | 3DHubs |

| SAS3 Ext. Cable | Supermicro CBL-SAST-0677 | 2 | $90.00 | $180.00 | eBay |

| SAS Ext. to Int. Adapter | Supermicro AOM-SAS3-8I8E | 1 | $45.00 | $45.00 | eBay |

| 140mm Fan | Noctua NF-A14 iPPC 3000 PWM | 3 | $28.00 | $84.00 | Amazon |

| 120mm Fan | Noctua NF-F12 iPPC 3000 PWM | 3 | $26.00 | $78.00 | Amazon |

| 80mm Fan | NF-A8 PWM | 10 | $16.00 | $160.00 | Amazon |

| PWM Fan Splitter | 5-Way Fan Hub | 2 | $7.00 | $14.00 | Amazon |

| PSU Adapter | Thermaltake Dual 24-Pin | 1 | $11.00 | $11.00 | Amazon |

| 2.5" Tray Adapter | Supermicro MCP-220-00118-0B | 2 | $24.00 | $48.00 | eBay |

| SSD Scratch Disks | Micron MX500 2 TB | 2 | $200.00 | $400.00 | Amazon |

| Cable Management Arm | SuperMicro MCP-290-00073-0N | 1 | $56.00 | $56.00 | Supermicro Store |

| Replacement UPS | APC SMT1500RM2U | 1 | $230.00 | $230.00 | eBay |

| UPS Battery Pack | APC APCRBC133 | 1 | $260.00 | $260.00 | APC Store |

| 2021 Update Total: | $7,366 | ||||

| Grand Total: | $24,411 | ||||

Parts Selection Details

My primary objectives when selecting parts were as follows:

- Allow for up to 24 drives,

- Be able to saturate a gigabit ethernet line on SMB/CIFS

- Have a quiet enough system that it can sit next to me in my office

Redundancy and overall system stability were also obvious objectives and led me to select server-grade components wherever appropriate. Here’s a breakdown of the reasoning behind each part I selected:

Chassis: SuperMicro SC846 – I purchased this used on eBay for $200 shipped. It retails for close to $1000, so it’s a pretty good deal. I considered the Norco RPC-4224 for a while, but the SuperMicro chassis is much higher quality and has better thermal design (i.e. bottomless HDD sleds for better airflow). The specific chassis I purchased came with an older version of the SC846 backplane that doesn’t support larger-capacity volumes, so I had to buy a different backplane. The PSUs the chassis came with are really loud, so I purchased some quieter ones. The stock system fans are also loud, so I replaced those too. More information on the replacement backplane, PSUs, and fans below. I currently have 8 open drive bays to allow for future expansion. [2019 Update:] For my big system expansion, I picked up a second 846 chassis on eBay. More details on this expansion are below.

Motherboard: SuperMicro X10SRL-F – This is SuperMicro’s basic LGA2011 server board. An LGA1151-based board would have worked

but the SC846 chassis doesn’t have mounting holes for micro ATX boardsand the full ATX versions of SuperMicro’s LGA1151 boards are very expensive (Update: apparently the 846 does have micro ATX mounting holes, so I could have saved a fair bit of coin there. Oh well... thanks /u/techmattr on reddit for pointing this out.) LGA2011 will also allow me to add more RAM if I ever need to.CPU: Intel Xeon E5-1630v3 – With 4 cores/8 threads at 3.7GHz, this has the highest single core clock speed in this family of Xeons, which is really nice for SMB/CIFS. I had to get it on SuperBiiz because it’s typically only sold to systems integrators, but it was a painless experience despite my initial misgivings. [2020 Update:] The old 4C/8T Xeon was not happy during scrubs with 48 disks on the system. I picked up an E5-2666 V3 to replace it, with is a custom Amazon SKU that I found for a very reasonable price on eBay.

RAM: Samsung M393A2G40DB0-CPB (4x16GB, 64GB total) – This is one of the SuperMicro-recommended RAM models for the X10SRL-F motherboard; all the other models are really hard to find. I went with ECC RAM because scary cosmic radiation (and because the FreeNAS community convinced me ECC was an absolute requirement). See this interesting article on more information on ZFS and the supposed dire need for ECC RAM. The RAM is also registered/buffered because the DIMM size is so large. 64GB is on the high side, but 32GB is the next step down and that would have been cutting it close. I shouldn't have to add more RAM when I eventually fill the last 8 HDD bays in my chassis [2019 Update:] I added another 64GB of RAM to support the expansion, bringing the total to 128GB.

Host Bus Adapter (HBA): IBM M1015 – These cards are flashed to IT mode so the FreeNAS OS has direct access to the drives for SMART data, etc. Each card handles 8 drives and I’ve got room for another card if (see: when) I need to populate the last 8 bays in the chassis. [2017 Update:] The time for another 8 drives came after all! I picked up a 3rd M1015 when I got my new drives. [2019 Update:] As part of the system expansion, I needed to consolidate PCIe cards. Part of that consolidation was replacing the 3x M1015's with a single LSI 9305-24i which can handle all 24 of the head-unit drives via my direct attach backplane. For the expansion shelf, I added an LSI 9305-16e. It's connected to an expander-based backplane in the shelf, so a single one of the HBA's four ports can handle all 24 drives in the shelf.

Data Drives: Western Digital Red (4TB & 8TB) – I was already running the 8x 4TB drives in my desktop, so I initially built the NAS around 8x 8TB drives, moved the data from the 8x 4TB drives in my desktop to the NAS, then moved the 8x 4TB drives into the NAS and expanded the pool. I bought a spare drive in 4TB and 8TB to have on hand in case of a failure. People like WD Red for NAS, but HGST would have been a good option too. I’ve found that the 8TB WD Red drives tend to run much hotter than the 4TB WD Reds. [2017 Update:] I added 8 more WD Red 8TB drives to the pool. I got them from Best Buy's Thanksgiving sale for $130 each. They're sold as external drives (WD EasyStore), but it's pretty easy to "shuck" them to get at the drive within. Most of the drives I got have white labels on them, but they're otherwise identical to WD Reds (same RPM and capabilities). I also got a 9th drive to serve as a "scratch" disk that I installed in a mount inside the chassis. I use this drive to store data that doesn't need to be stored on the main pool with redundancy (temp files, dev/test stuff, etc). [2018 Update:] I removed the scratch disk and replaced it with a pair of 2 TB SATA SSDs; see below for details. [2019 Update:] 16 more drives added in the expansion shelf.

[2020 Update:] I added another vdev worth of disks to the expansion shelf (8x 8TB) and replaced all the remaining 4TB disks with 8TB disks. Several of the 4TB disks had failed so I only had 4 or 5 left alive in the pool. I decided it was time to replace them as the ones that were left alive had like 9 years of run time on their SMART stats. The replacement process was very painless as I had multiple free bays (I hadn't installed the 8x new 8TB disks yet). I followed the steps here and found I could run the replace operation on all the disks in parallel. After the operation had finished, my pool automatically expanded.Power Supply Unit (PSU): SuperMicro PWS-920P-SQ – These are the 920W redundant PSUs for the SC846 chassis and are much quieter than the stock 900W PSUs that came pre-installed in my SC846. I got them new/open box from eBay for $120 each. I guess the “-SQ” stands for “super quiet”? SuperMicro doesn’t state anywhere in any of their documentation or sales material that these are a quieter option; they don’t even give acoustic dB level ratings for their PSUs. I found out about these PSUs after reading user reviews on Amazon and Newegg. Whatever, they’re really quiet despite the lack of supporting marketing material. [2019 Update:] Obviously, the shelf needs power too! Got another 2 PSUs for it.

10GbE NIC: Intel X540T2 [2018 Update] – This is a 10GbE copper network interface card from Intel with 2 RJ45 ports on it. With this upgrade, I also got a matching NIC for my desktop computer and a Netgear switch with 2x 10GbE ports on it. Eventually, I want to expand the 10G capabilities of my home network to some other machines, but the gear is still pretty expensive. Note that with this 10G network card, there were all sorts of network-related tunables I had to add to FreeNAS to get everything running a full-speed. More notes on that below.

Backplane: SuperMicro BPN-SAS-846A – The SuperMicro SC846 backplane lineup is very confusing, so a high-level overview of the more common SC846 backplanes might be helpful:

BPN-SAS-846EL1 - This is the backplane that came in my server, but it’s listed as end-of-life (EOL) by SuperMicro. It has a built-in SAS expander chip but it isn’t SAS2 capable so the maximum total capacity of the array is limited (to exactly what capacity, I am not sure). In other words, you might be able to populate 24 * 2TB drives and see all 48TB, but if you try 24 * 4TB drives, it might only see 60TB. I have no idea what the actual limitations are; these numbers are purely arbitrary examples.

BPN-SAS-846A - This is the backplane I purchased. It’s basically a SAS breakout cable baked into a PCB, so no expander chip to potentially cap the total array capacity. It has 6 mini-SAS ports on the back, each of which directly connects to 4 hard drives.

BPN-SAS2-846TQ - This backplane has 24 individual SATA ports directly connected to the 24 drives on the other side of the board. It’s very simple and a decent option, but cabling can be messy. These can also be found at a reasonable price on eBay.

BPN-SAS2-846EL1 - This is the SAS2-capable expander-based backplane. This is usually reasonably priced and would have been a good option in my build, but I had a hard time finding one on eBay when I was doing all my purchasing. If it has a maximum total array capacity, it’s large enough that you shouldn’t have to worry about it. With this backplane, you would only need to use one port on a single M1015 card and the backplane would expand that single connection out for all 24 hard drives. However, this would cause a slight bottleneck with most platter drives (you would get ~24Gb/s on the SAS link, so roughly 1Gb/s or 125MB/s per drive). I’ve seen some people on forums claim that you can connect two HBA cards to this backplane to double the total bandwidth to 48Gb/s, but this is not documented anywhere by SuperMicro; they say the other two mini-SAS ports are only for cascading with other storage systems.

BPN-SAS2-846EL2 - The same as the above -846EL1, but with a second expander chip to support failing over to a redundant (set of) HBA card(s). These tend to be $600+ on eBay when you can find them.

BPN-SAS3-846EL1 & -846EL2 - The same as the above two items, but with a SAS3 capable expander chip (or 2 chips in the case of the -846EL2).

I’ll also note here the equally-confusing SC846 chassis model numbers are based on the backplane they include and their PSUs. You can double-check this by going to the product page on SuperMicro’s site and clicking “See Parts List”. [2019 Update:] For the shelf, I picked up a SAS3 expander backplane so I could attach all the drives via a single cable. BPN-SAS3-846EL1 is the model number.

Boot Device: Intel 540s 120GB SSD – This is the cheapest SATA Intel SSD I could find. People typically use USB drives for their boot device, but for a build like this, the gurus recommend using SSDs for increased system reliability. The controllers on most USB drives are pretty unreliable, so it was a worthwhile upgrade for me.

VM SSD/NVMe Adapter: Samsung 960 Pro and StarTech PCIe card [2017 Update] – When I first built this server, all my VMs were running directly on the main storage pool. When there was a lot of disk I/O going on from the network share or some other service (scrubs, SMART tests, etc.), VM performance would drop to a crawl. My solution was to pick up an NVMe SSD and migrate all the VMs to that. My motherboard doesn't have an M.2 slot, so I picked up a cheap StarTech PCIe card and it seems to work pretty well. Migrating the VMs basically involved taking a snapshot of the bhyve dataset in ZFS and restoring it to a new pool I created with the NVMe drive. I had to tinker around with some settings in iohyve to make sure everything was pointed to the right place, but it ended up being a fairly painless process. VM performance after the change improved significantly. [2020 Update:] I had been getting extremely frusterated with bhyve over the past 4 years and finally hit a breaking point. I put together a Proxmox server and moved all my VMs off the FreeNAS system. I'm planning on doing a writeup on the Proxmox build at some point, so stay tuned for that!

CPU Cooler: Noctua NH-U9DXi4 – This is a basic server-grade CPU cooler from the much-respected Noctua. I was initially nervous about its fit with my motherboard and chassis, but it ended up working out pretty well. While it does provide enough vertical clearance for DIMMs installed in the RAM slots closest to the CPU socket (at least with these Samsung DIMMs), it’s so close that I’ll probably have to remove the cooler to actually perform the installation in those slots. You can sort of see what I mean here (same case exists on the other side); notice the RAM slot just under the edge of the cooler in the pictures here and here.

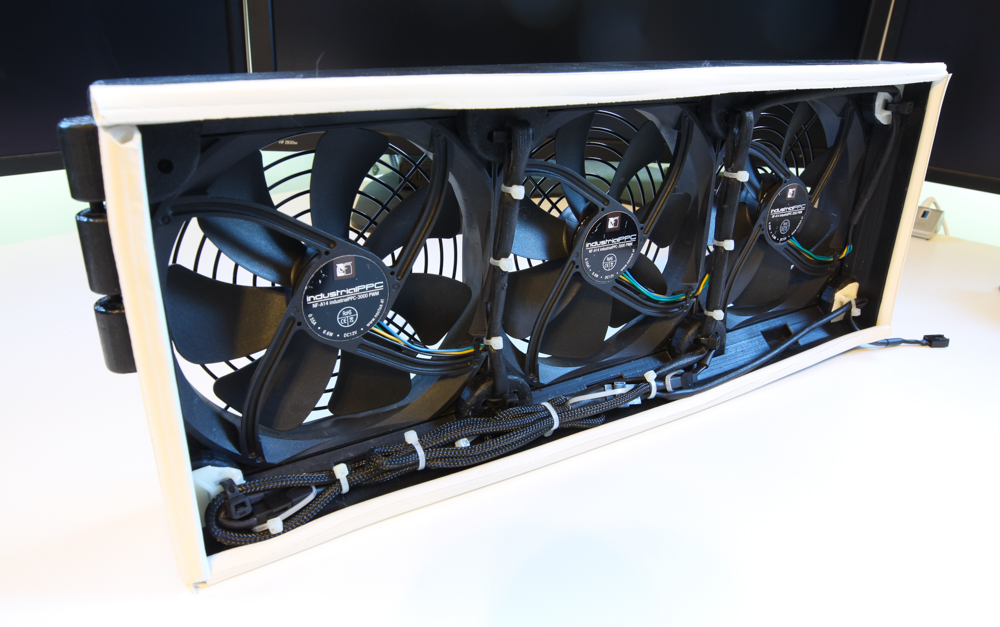

Front Fan Shroud and 140mm Fans: Custom 3D print and Noctua NF-A14 iPPC 3000 PWM [2017 update] – Keeping the drives cool (between 30 and 40 degrees C) while maintaining a low noise level ended up being more of a challenge than I expected. With my original configuration, fans would sit at 2000+ RPM most of the time and produced more noise than I was willing to deal with. I ended up taking fairly drastic measures to resolve this. I designed a custom fan shroud in Sketchup that allows me to mount 3x 140mm fans blowing air into the drive bays from the outside. The fans are powered by a cable I ran through a vent hole on the side of the case. I have much more information on this fan shroud in sections below. [2019 Update:] I made a second fan shroud to go on the expansion shelf.

HDD Fans: Noctua NF-F12 iPPC 3000 PWM – The SC846 comes with 3 80mm HDD fans (which are absurdly loud) mounted to a metal “fan wall”. Fortunately, the fan wall is removable and 3x 120mm fans fit perfectly in its place. I zip-tied the 120mm fans together and used tape-down zip tie mounts to secure them to the chassis. I started with Noctua NF-F12 1500 RPM fans, but some of the drives were getting a bit hot under heavy load, so I switched to their 3000 RPM model. I have pictures of the fan wall install process and more information in the section below. [2019 Update:] I got another set of these fans for the expansion shelf.

Rear Fans: Noctua NF-R8 PWM – As I mentioned above, the stock chassis fans are super loud. These Noctua 80mm fans fit perfectly in their place. I was able to use the hot-swap fan caddies that came with the chassis, but I have it bypassing the hot-swap plug mechanism that SuperMicro built in. [2019 Update:] I got another set of these fans for the expansion shelf.

Uninterruptable Power Supply (UPS): APC SUA1500RM2U Smart-UPS – I got this from eBay, used chassis with a new battery. The total load capacity is 980W and with the server and all my network gear on it, it sits around 25-30% load. It’s working really well for and FreeNAS comes with drivers for it, so I can monitor all sorts of stats and have it shut down the server automatically on longer power outages. [2019 Update:] I picked up another UPS to handle the increased power load from the shelf. When I originally had both the shelf and the head unit on the single UPS, it would drain it in ~30 seconds upon a power outage. I thought maybe its batteries were dead, so I replaced those, but the runtime only increased to ~90 seconds. With the second UPS, I have one power supply from the head and the shelf connected to each UPS. The total runtime is now close to 15 minutes, which is fantastic (and somehow doesn't make sense considering it was only ~90 seconds on a single PSU, but I'll take it).

SSD Cage: SuperMicro MCP-220-84603-0N – This was $25 shipped on eBay and is probably the best way to secure a pair of 2.5” drives inside the chassis other than of double-sided tape or zip-ties.

VM iSCSI SSD: Micron MX500 2 TB [2018 Update] – I added 2 large SATA SSDs to my system in place of the 8TB scratch disk. These disks are striped together in another pool and shared out via iSCSI to one of my high-transaction VMs (which is now performing MUCH better now that it's off the spinning disks). I have that VM resharing the mounted iSCSI volume via SMB so I can access it from my Windows desktop.

Rack Cabinet: Dell 42U Rack Cabinet [2017 Update] – I recently picked up a 42U rack from Craigslist to replace the Ikea coffee table I was using as a temporary solution. Obviously, it's much easier to work on machines in a proper rack, but I realize these aren't a practical solution for everyone. With that in mind, I will leave the information on the Ikea coffee table rack in the article in hopes that someone will find it useful. [Original text:] I’m using a Lack Coffee Table from IKEA with some reinforcement on the lower shelf to serve as a rack for the server and UPS. The LackRack is only temporary, but for $25 it’s done remarkably well. I have metal corner braces on each leg to provide extra support to the lower shelf and a 2x4 piece propping up bottom of the lower shelf in the middle. I have some more notes on the Lack Rack assembly process in the section below.

L2ARC Drive: Intel Optane 900P 280GB AIC [2019 Update] – I added this drive originally as a SLOG but as my iSCSI use dropped off, I switched it to L2ARC duty. I'm not sure it's getting that much use as an L2ARC so I may switch it to my workstation. I bought the drive new on Amazon for $270, but it comes with a code for a ship in the on-line game Star Citizen. I was able to sell this code on eBay for $137, bringing the effective cost of the card down to $151, which is truly an incredible price.

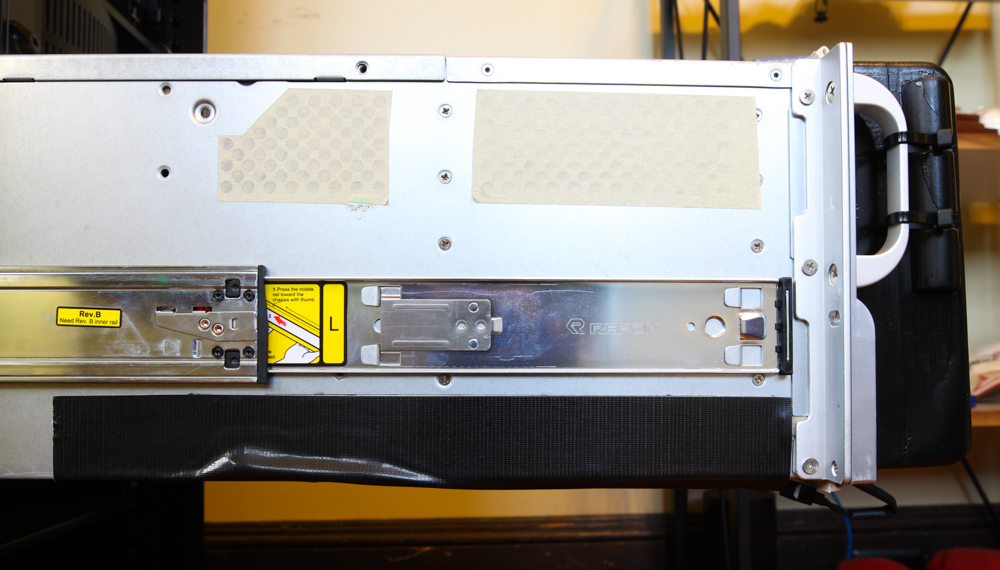

Server Rails: Supermicro MCP-290-00057-0N [2017 Update] – Along with the rack cabinet, I picked up a set of SuperMicro rails for the chassis. They were amazingly easy to install and make the machine so much easier to work on. [2019 Update:] I got another set of rails for the expansion.

Cable Management Arms: SuperMicro MCP-290-00073-0N [2019 Update] – These arms aren't technically made for the 846 chassis, but I was able to cut down one of the brackets a bit and make it work. I've got some photos of this in the section below.

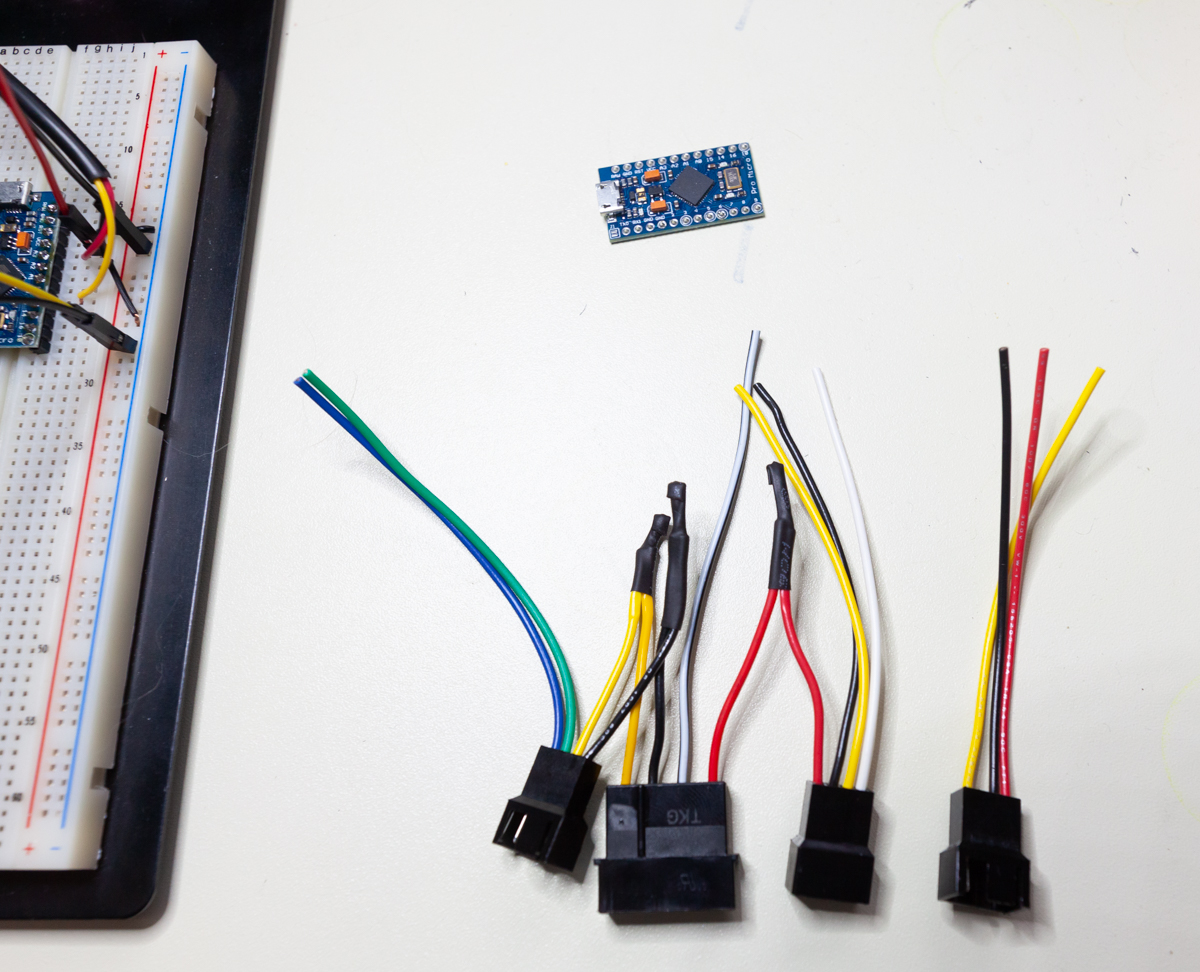

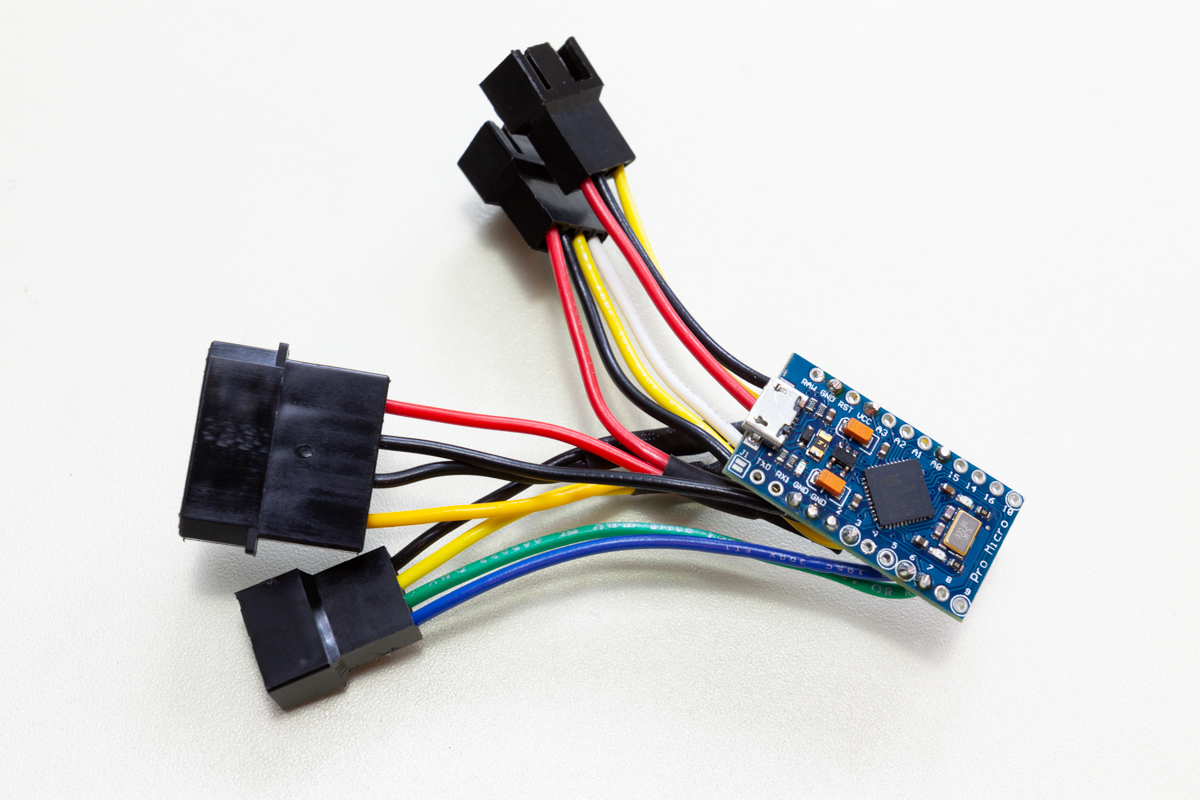

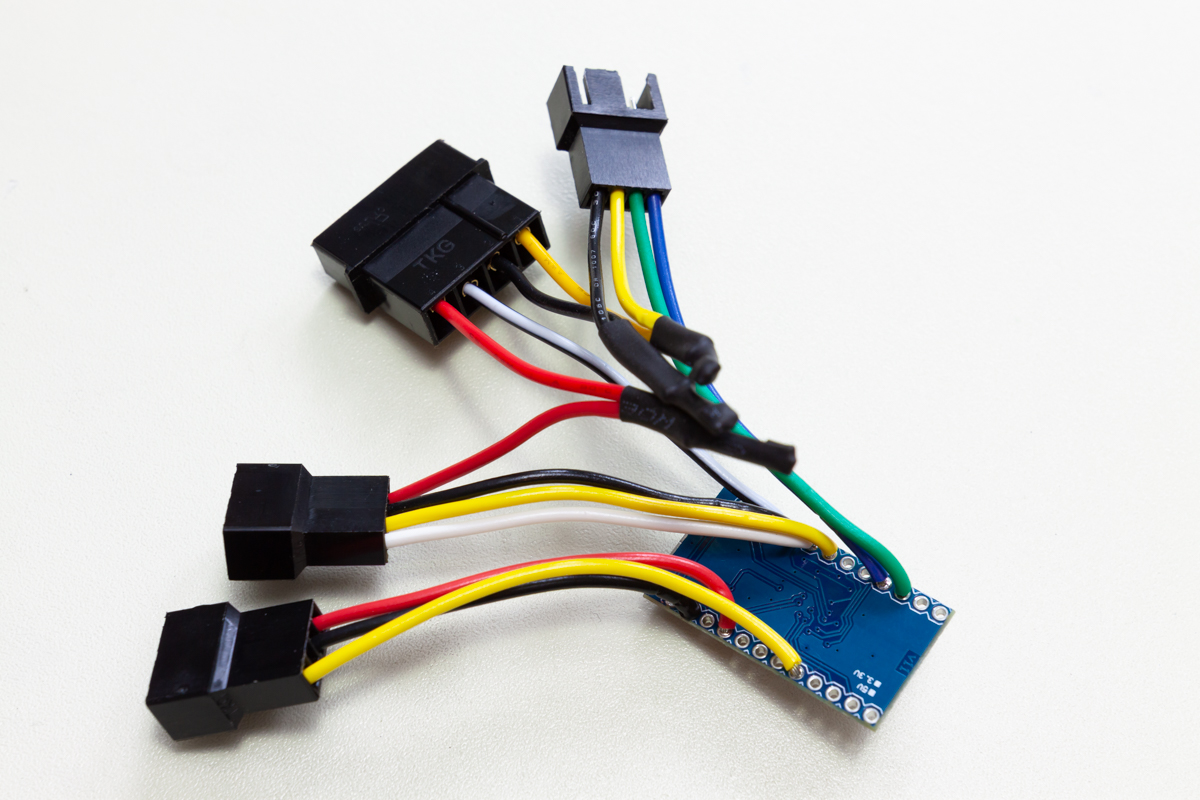

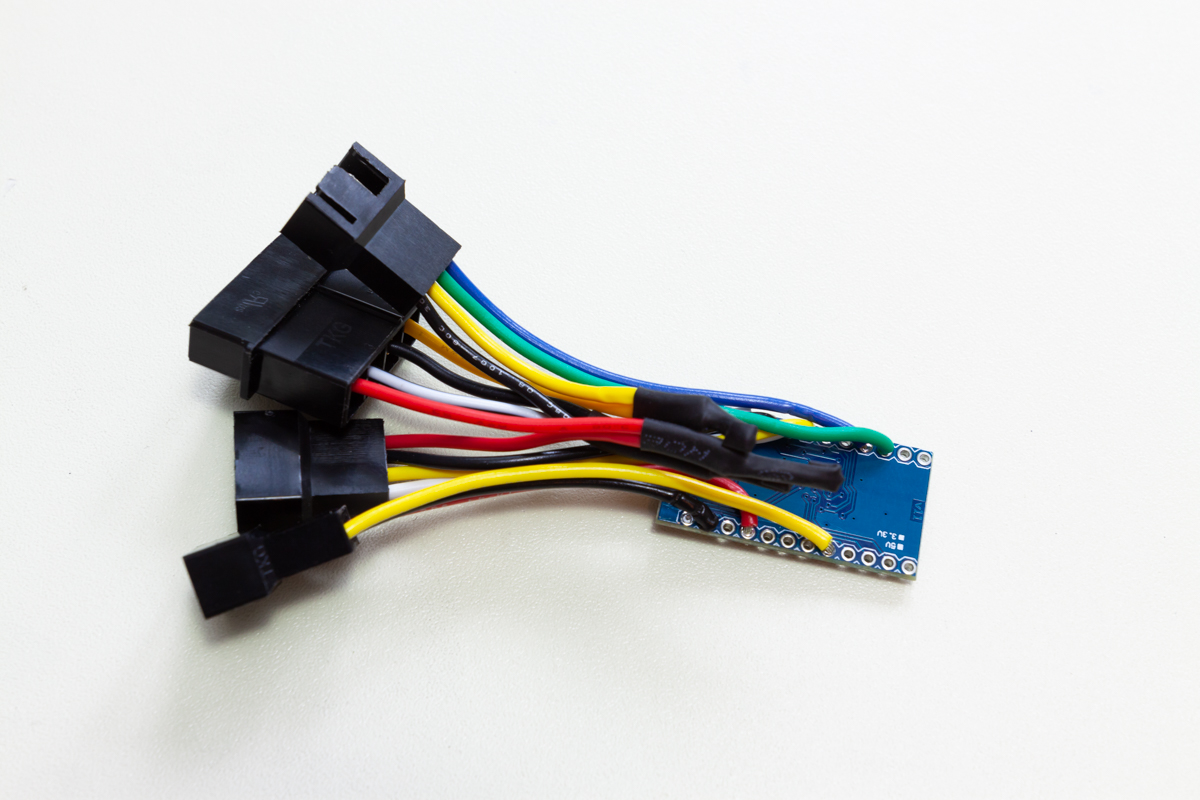

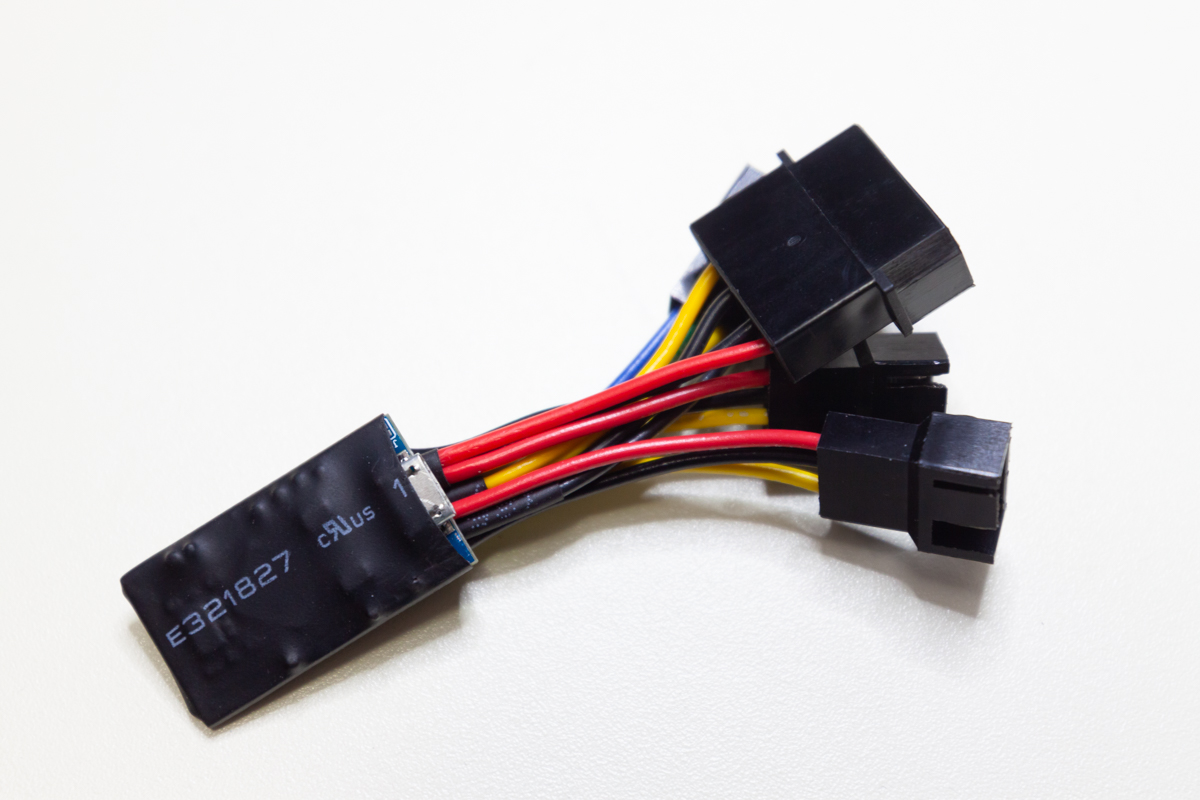

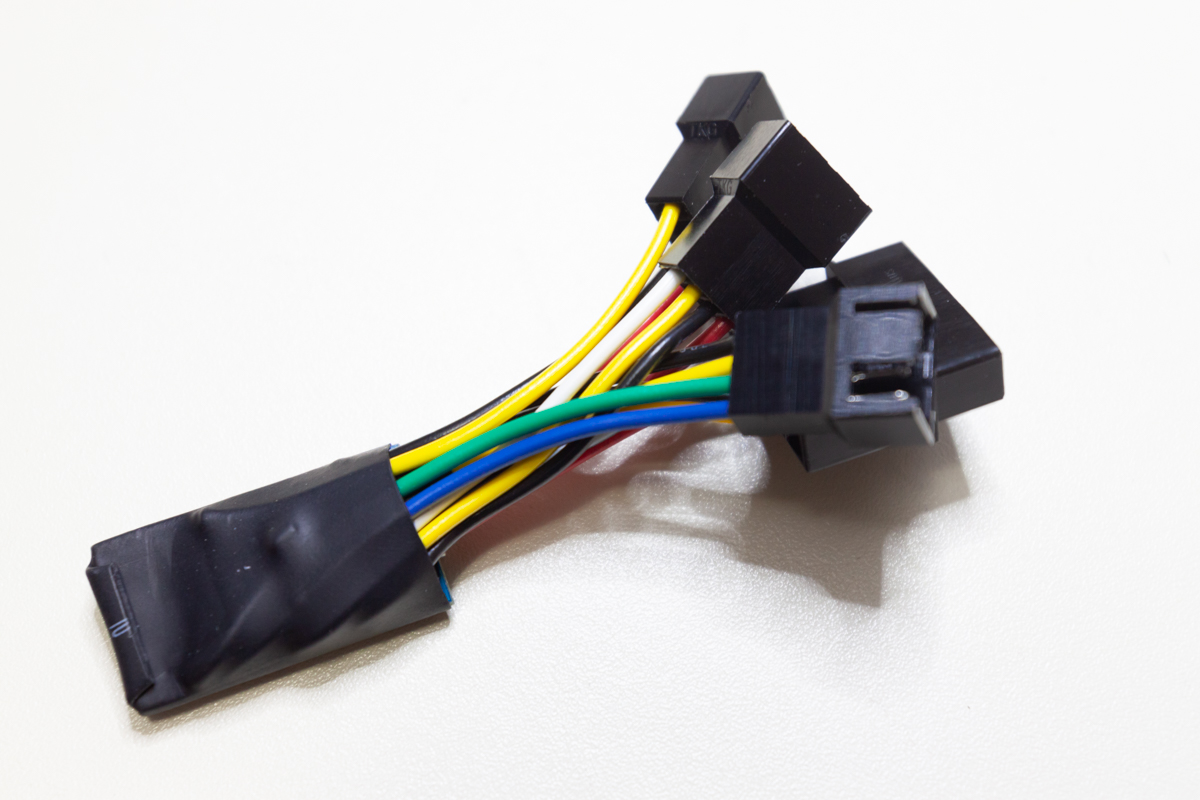

Fan Control System: Raspberry Pis & 11" Touchscreen [2019 Update] – I had to do a massive overhaul on the fan control setup to be able to support independent cooling for my expansion shelf. After lots of trial and error, I ended up using Raspberry Pis to control the fans as well as to host a web server to display system vitals on a small touchscreen. Lots of detail on this in the section below.

Misc: The chassis did not come with HDD screws, so I got a baggie from Amazon for a few dollars (make sure the ones you get will fit flush in the drive cages, otherwise you won’t be able to insert your drives). I picked up the SAS cables from Monoprice via Amazon. I got a 3-to-1 PWM fan splitter so I could attach all the HDD fans to the FANA header on the motherboard (more on this below). I also used a ton of zip ties and some tape-down zip-tie mounts to make the cables all nice and tidy. [2017 Update:] With the front fan shroud, I needed a couple PWM extension cables and splitters as well as 140mm fan guards. I also installed a small 4 CFM blower fan to cool the scratch disk; more info on that below. [2019 Update:] I had to replace a bunch of the SAS cables with the HBA upgrade. I also swapped out lots of the fan cables with custom-made replacements that use heavier gauge wiring. The fan splitters and extension cables I was using before were limiting the maximum fan speed of all my 120mm and 140mm fans.

I’m very happy with the parts selection and I don’t think I would change anything if I had to do it again. I have a few future upgrades in mind, including a proper rack and rails (Update: Done!), getting another M1015 and filling the filling the 8 empty HDD bays (Update: Also done!), installing 10GbE networking (Update: Done as well!), and replacing the 4TB drives with 8TB drives, (Update: Did this too!) but the current setup will probably hold me for a while (Update: It's already like 75% full...).

Build Process

For the most part, the system build was pretty similar to a standard desktop computer build. The only non-standard steps I took were around the HDD fan wall modification, which I discussed briefly in the section above. The stock fan wall removal was pretty easy, but some of the screws securing it are hidden under the hot swap fan caddies, so I had to remove those first. With the fan wall structure out of the way, there were only two minor obstructions left – the chassis intrusion detection switch and a small metal tab near the PSUs that the fan wall screwed in to. The intrusion detection switch was easily removable by a pair of screws and I cut the small metal tab off with a Dremel (but you could probably just bend it out of the way if you wanted to). With those gone, the chassis was ready for my 120mm fan wall, but because the fans would block easy access to the backplane once they’re installed, I waited until the very end of the build to install them.

With the fan wall gone, swapping out the EOL backplane (which came pre-installed in my chassis) for the new version I purchased was pretty easy. Some of the screws are a little tough to access (especially the bottom one closest to the PSUs), but they all came out easily enough with some persistence. There are 6x Molex 4-pin power connectors that plug into the backplane to provide power to all the drives. The SuperMicro backplanes have a ton of jumpers and connectors for stuff like I2C connectors, activity LEDs, and PWM fans, but I didn’t use any of those. Drive activity information is carried over the SAS cable and all my fans are connected directly to the motherboard. If you’re interested, check the backplane manual on the SuperMicro website for more information on all the jumpers and connectors.

After I swapped out the backplane, the motherboard, CPU, RAM, CPU cooler, PSUs, SSDs, and HBA cards all went in like a standard computer build. The only noteworthy thing about this phase of the installation was the orange sticker over the motherboard’s 8 pin power connector that reads “Both 8pins required for heavy load configuration”. It’s noteworthy because there is only one 8 pin power connector on the board... Maybe they meant the 8 pin and 24 pin power connectors? Whatever the case may be, just make sure both the 8 pin power and 24 pin power connectors are attached and you’ll be fine. I also made note of the SAS addresses listed on the back of each of the M1015 cards before installing them. The SAS address is printed on a sticker on the back of the card and should start with “500605B”, then there will be a large blank space followed by 9 alpha-numeric characters interspersed with a couple of dashes. These addresses are needed in the initial system configuration process.

[2018 Update:] I ended up removing this scratch disk and replacing it with a pair of SATA SSDs. [2017 Update:] I wanted an extra drive to host a ZFS pool outside the main volume. This pool would just be a single disk and would be for data that didn't need to be stored with redundancy on the main pool. This drive is mounted to a tray that sits right up against the back of the power supplies on the inside of the chassis and it tended to get much hotter than all the front-mounted drives. My fan control script goes off of maximum drive temperature, so this scratch disk kept the fans running faster than they would otherwise. To help keep this drive cool, I drilled new holes in the drive tray to give a bit of space between the back of the drive and the chassis wall. I also cut a small hole in the side of the drive tray and mounted a little blower fan blowing into the hole so that air would circulating behind the drive. I had to cut away a portion of the SSD mounting tray to accommodate the blower fan. In the end, I'm not sure if the blower fan with its whopping 4 CFM of airflow makes any difference, but it was a pain to get in there so I'm leaving it. In fact, I ended up just modifying the fan script to ignore this scratch disk, but I do keep an eye on its temperature to make sure it's not burning up. A picture of the blower fan is below:

As this was my first server build, I was a little surprised that unlike consumer computer equipment, server equipment doesn’t come with any of the required screws, motherboard standoffs, etc., that I needed to mount everything. Make sure you order some extras or have some on-hand. I ordered a 100-pack of SuperMicro HDD tray screws on Amazon for $6 shipped; I would recommend using these screws over generic ones because if you use screws that don’t sit flush with the HDD sled rails, you’ll have a lot of trouble getting the sled back in the chassis and could even damage the chassis backplane.

As I mentioned above, the CPU cooler I’m using provides enough vertical clearance for the RAM, but I will probably have to remove the cooler to actually get the RAM into the slot if I ever need to add RAM. This isn’t a huge deal as the cooler is really very easy to install. I will note here that the cooler came with 2 different sets of mounting brackets for the LGA2011-v3 narrow ILM system so you can orient the airflow direction either front-to-back or side-to-side (allowing you to rotate the cooler in 90 degree increments). Obviously, for this system, I wanted air flowing in from the HDD side and out the back side, so I had to use the appropriate mounting bracket (or, more accurately, I realized there were two sets of narrow ILM brackets only after I installed the incorrect set on the cooler).

The front panel connector was a little confusing as the non-maskable interrupt (NMI) button header is in the same assembly on the motherboard as all the front panel headers (this header assembly is marked “JF1” on the motherboard and is not very clearly described in the manual). The connectors for all the front panel controls and LEDs are also contained in one single plug with 16 holes and no discernible orientation markings. After studying the diagrams in the motherboard manual, I was able to determine that the NMI button header pins are the 2 pins on this JF1 assembly that are closest to the edge of the motherboard, then (moving inwards) there are 2 blank spots, and then the 16 pins for the rest of the front panel controls and LEDs. The 16 pin front panel connector plugs into these inner 16 pins and should be oriented so the cable exits the 16 pin connector towards the PSU side of the chassis. Basically, if you have the front panel connector plugged into these 16 pins but the power button isn’t working, try flipping the plug around. If you have an NMI button (not included in the stock chassis), it will plug into those last 2 pins closest to the motherboard’s edge. If you don’t have an NMI button, just leave those pins empty.

I also swapped out the rear fans for quieter Noctua 80mm models at this point. The only way to mount them in the chassis is with the hot swap caddies (the chassis isn’t drilled for directly-mounted fans), but the process is pretty straight-forward. The stock fans have very short cables, maybe 1 inch long, because the PWM connectors are mounted onto the side of the caddie so they can mate with the hot-swap plug on the chassis itself when you slide the caddie into its “rail” system. That plug connects to what is essentially a PWM extension cable mounted to the caddie rails which connects the fans to the motherboard’s PWM fan headers. I took out this whole hotswap mechanism because the Noctua fan cables are much longer than the stock fan cables and the Noctua PWM connectors are missing a small notch on the plug that is needed to secure it in the hot swap caddie. It’s tough to describe, but it would be pretty obvious what I mean if you examine the rear fan caddies yourself.

With all the server guts installed and connected, I did some basic cable management and prepared to install my 120mm fan wall. I started by using zip-ties to attach the 3 fans together (obviously ensuring they would all blow in the same direction). The Noctua fans have soft silicone pads in each corner, so vibrations shouldn’t be a big issue if you get the pads lined up right. I put the fan wall in place in the chassis and marked off where the zip tie mounts should be placed with a marker, stuck the mounts on the marks (4 in total on the bottom), and used more zip ties to mount the fan wall in place. With the bottom of the fan wall secured in place, the whole thing is pretty solid, but I added one more zip tie mount to the top of the wall on the PSU side. This sort of wedges the fan wall in place and makes it all feel very secure. Once the fans were secure, I connected them to the 3-to-1 PWM fan splitter, attached that to the FANA header (this is important for the fan control script discussed later), and cleaned up all the cables.

[2019 Update:] The center fan wall and the front fans discussed below are no longer connected to the motherboard. With the modifications I did to the fan control setup, these fans are connected directly to an independent Raspberry Pi system that generates the PWM signals based on commands received from a script on the FreeNAS itself. Power for the fans is provided by a +12V line spliced in from the PSU.

While I’m talking about the HDD fan wall, I’ll also mention here that after running the server for a few days, I noticed some of the drive temperatures were in the low 40s (Celsius), much higher than they should be. The Noctua fans I originally had installed maxed out at 1500 RPMs, but I decided I would be safer with the Noctua iPPC fans that could hit 3000 RPM. I have a fan control script running (more on that below), so they hardly ever need to spin faster than 1500 RPM, but it’s nice to know the cooling is there if I ever need it. In addition to upgrading my original fans, I made a few minor modifications to improve overall cooling efficiency for the whole system:

I used masking tape to cover the ventilation holes on the side of the chassis. These holes are on the hard drive side of the fan wall and are intended to prevent the stock fans from starving, but with lower speed fans they allow air to bypass the hard drives which cuts the total cooling efficiency.

I cut pieces of index cards and used masking tape to block air from flowing through the empty drive bays. The air flow resistance through the empty bays was much lower than it was through the populated bays so most of the air was bypassing the hard drives. You can see a picture of it here. [2017 Update:] These bays are now populated with drives, so the index cards and masking tape came off!

Air was flowing from the CPU side of the HDD fan wall back over the top of the fans rather than coming through the HDD trays, so I cut a long ~3/4” thick strip of wood to block the space between the top of the fans and the chassis lid. I measured the wood strip to be a very tight fit and zip-tied it to the fans to secure it in place. I even cut out little divots where the zip ties cross the top of the wood strip to be extra cautious. You can see this wood strip in the 3rd and 4th pictures in the section above.

[2017 Update:] The fans started to get noisy in the summer when ambient temperatures went up, so I took more drastic measures. I designed a bezel that fits over the front part of the chassis and allows me to mount 3x 140mm fans blowing air into the drive bays from the outside. The bezel is secured in place with zip ties and powered via a PWM extension cable that I ran through one of the side vent holes and along the outside of the chassis. This fan bezel has had a substantial improvement in overall airflow and noise level. More information on it just below.

With these simple modifications in place, effective airflow to the drives increased dramatically and HDD temps dropped by ~10C even with fan speeds under 1500 RPM. You can check relative airflow levels to the hard drive bays by holding a piece of paper up in front of the drive bays and observing the suction force from the incoming air. With a heavy workload, the fans sometimes spin up to 2000 RPM for a minute or two, but overall the system is very quiet. The fan control script I’m running is set to spin up when any drive gets above 36C.

[2017 Update:] I built this machine in the fall, and through the colder winter months, the cooling I had in place was able to keep up with the heat output without making too much noise. When summer rolled around, however, the fans started to get annoyingly loud. I eventually decided to design a fan shroud for the front of the server. It would allow me to mount 3x 140mm fans in front of the drive bays blowing inward. I had the part 3D printed via 3DHubs in PLA (10um layer size) and it turned out pretty nice. There's a link to the 3D model of the bezel below. After a lot of sanding, priming, painting, and some light bondo application, I ended up with piece below:

[2025 Update:] I now have several of these fan shrouds finished (sanded, painted, fans and guards installed) available for sale. I can also 3D print a fresh shroud if you would prefer to finish it yourself. If you're interested, please get in touch: jason@jro.io

The 20d nail run through these knobs allows for more secure mounting.

The shroud is zip-tied to the chassis handles. Also note the weather stripping.

The PWM extension cable is run out of one of the side vent holes and along the bottom of the chassis (covered with black tape).

[2017 Update ctd.] The 3D model for the fan bezel can be found on Sketchfab. You should be able to download the STL file on that same page. There are a few things to note about the model for anyone that wants to try something similar:

The assembly is designed to be mounted to the chassis with zip ties that loop between the chassis handles and a 20d nail inserted in the holes on the "knob" things on the sides of the bezel. I cut the heads and the points off of a couple 20d nails (leaving a straight metal rod), put them in the holes in the knobs and glued them in place with CA model glue. This lets you run the zip ties through a metal piece and secure everything far more tightly than you would be able to with plastic-only.

The fans are powered via a PWM extension cable that I threaded through a vent hole in the side of the chassis and ran along the bottom. I had to disconnect the plug on one end of the cable, but this is pretty easy to do with a small screwdriver. I used a 3-way PWM splitter to connect all the fans to this single extension cable.

The fans are obviously oriented to blow inward, so the "back" or suction side of the fans is facing out towards the room. This side of the fan doesn't have any sort of guard built into it, so I used some wire fan guards to ensure fingers and dog noses don't get nipped.

The "knobs" on the inside of the part are designed to let you zip tie fans in place. The 4 knobs in the corners have a small hole for the zip tie to be threaded through, but these came out unusably small in my print. I ended up securing the fans in place using only the 4 inner knobs and glued the fan guards down in the 4 corners. If you would prefer to use secure the fans and fan guards with screws, you may need to resize the screw holes as they're slightly larger than standard fan holes.

I designed 3 cutouts on the inside bottom of the bezel for tape-down zip tie mounts. I ended up not using these because the zip tie mounts prevented the bottom of the bezel from contacting the chassis; the zip tie mounts hit the drive trays, which protrude from the front of the server quite a bit.

There is a small cutout in the bottom left corner for the PWM cable to exit the bezel. If you run a PWM extension cable down the right side of your chassis, you'll want to relocate this cutout.

If you have your server installed in a rack with a front door, it's worth checking that the bezel won't protrude so far forward that it blocks the door from shutting. I ended up with maybe 5mm of clearance with the weather stripping installed.

The bezel didn't make a very tight seal with the chassis, so a lot of air was escaping around the edges. I got some weather stripping tape from the hardware store, applied a strip around the bezel and another around the chassis and it fixed this problem nicely. It also provided a bit more clearance for the cable bundle on the inside of the bezel which was jammed up against the front of the drive trays before.

[2017 Update ctd.] The fan bezel has had a significant impact on overall cooling performance and noise level. Without the bezel, the internal 120mm fans would need to run at 2000+ RPM almost constantly during the summer months. Now that the bezel is installed, I can keep the fans at 1200-1300 RPM and all the drives are just as cool as before.

[2017 Update ctd.] I made a short video that covers the various changes I made to my chassis' cooling system and demonstrates the noise level at various fan speeds:

The last step in the system build was to get all the hard drives loaded into their sleds and slide them into the chassis. If you aren’t populating all 24 bays in the chassis, be sure to note which mini-SAS ports connect to which bays; this is labeled on the rear of the backplane and in the backplane manual.

[2017 Update:] With everything built, I could load the server and the UPS into the rack cabinet. The inner rails snapped right into place on the sides of the chassis and the outter rails slotted directly into the square holes on the rack posts. I originally had the outer rails installed a third of a rack unit too low, so I had to move them up a slot. If you're unsure of which set of holes to use for the outter rails so your machine lines up with the marked rack units, check the photos above of my machine in the rack. The UPS is just sitting on the floor of the rack cabinet (which solid steel and seems extremely study) and occupies the lowest 2U.

[Original text with details on building the LackRack:] With everything built, I could load the server and the UPS into the LackRack. The UPS went on the floor and the server went on the lower shelf. I have all my networking gear on the top shelf along with some other knick-knacks. Assembly of the LackRack itself was pretty easy, but there were a few minor things worth noting. I picked up some basic metal corner braces from a hardware store for reinforcement of the lower shelf; they’re around 3” long and 3/4” wide and seem to work pretty well. I mounted the braces to the legs of the table and the underside of the lower shelf with basic wood screws. The lower shelf is only ~1/3” thick, so I got very stubby screws for that side of the brace. When measuring how low or high to install the lower shelf, I forgot to make sure leave enough room for the server to sit in the space and had to re-do part of the installation at a lower height. For a 4U server (like the one I’ve got), you’ll need a smidge over 7”, so the shelf has to go an inch or two lower than the IKEA instructions would have you mount it. The legs of the table (into which I mounted the braces) are very light weight; it feels like they’re totally hollow except for a small solid area around the middle where you’re supposed to mount the tiny IKEA-provided braces that come with the table. Don’t over-tighten the screws you put into the legs even a little bit, otherwise it will completely shred out the wood and won’t be very secure. In fact, while I was installing one of the braces, I leaned on my screw gun a bit too hard and before I even started to turn the screw, it broke through the outer “wall” of the leg and just went chonk and fell down into place. Not a confidence-inspiring event while building the “rack” that will soon house my ~$5,000 server... Regardless, with all the corner braces installed, the two shorter ends of the shelf seem pretty sturdy. However, the shelf is so thin that it would have started to sag (and could have possibly broken) with any weight in the middle. With a file server, most of the weight is in the front due to the drives, but I thought it was still a good idea to brace the middle of the shelf from the underside. I cut a short piece of 2x4 that I could use to prop up the middle of the lower shelf from underneath.

With everything installed and mounted, I was finally ready to power on the system for the first time and move on to the installation and configuration process!

Flashing the M1015 HBA Cards & Installing FreeNAS

[2019 Update:] The two new LSI HBAs I bought to replace the M1015's do not require any crossflashing or reflashing (unless the firmware is out of date). The process below is for the IBM cards which are just re-branded LSI 9211-8is. The crossflashing operation with the MEGAREC utility lets you erase the IBM firmware and put LSI's firmware back on them. Again, with the newer LSI cards I got, this isn't necessary because they're already running LSI's IT firmware out of the box.

I was pretty lucky and my server POST’d on the first try. Before actually installing an OS, I needed to flash the M1015 cards with the IT mode firmware. This article has instructions on that process. The download linked in that article goes down quite a bit, so I’ve rehosted the necessary firmware files here [.zip file]. This file contains 3 DOS applications (sas2flsh.exe, megarec.exe, and dos4gw.exe), the IT firmware image (2118it.bin), the BIOS image file (mptsas2.rom), and an empty file for backing up stock card firmware (sbrempty.bin). If you're flashing more than one card, you will want to copy this file so you have one per card. The sas2flsh and megarec applications are used below to back up, erase, and reflash the cards. The dos4gw application allows these applications to address more memory space than they would be able to otherwise, but you won't need to run it directly.

I used Rufus to create a bootable FreeDOS USB drive and copied in the files from the above .ZIP archive. Before performing the rest of the process, it is a good idea to visit the controller manufacturer’s website to make sure you’re using the most recent firmware image and BIOS. They change the layout and URL of the official Broadcom website that hosts the firmware, so just search Google for “SAS 9211-8i firmware”, find the downloads section, and open the firmware sub-section. The versions are marked by “phase” numbers; the firmware/BIOS version I included in the above ZIP file is from phase 20 or “P20” as it’s listed on the site. If a more recent version is available, download the MSDOS and Windows ZIP file, find the BIOS image (called mptsas2.rom) and the IT firmware (called 2118it.bin; you do not want the IR firmware called 2118ir.bin) and copy them both onto your bootable USB drive overwriting the files I provided.

With the SAS addresses I wrote down during the build process on hand, I booted from my USB drive into the FreeDOS shell and executed the following from the DOS terminal:

megarec -adpList

MegaREC is a utility provided by LSI that I'll use to back up each card's original firmware and then wipe them. The above command lists all the adapters it finds; make sure all your cards are listed in its output. When I originally flashed my cards, I had two installed, so I run each command once per card with the adapter number after the first flag. I made two copies of the sbrempty.bin file, called sbrempty0.bin and sbrempty1.bin; make sure to adjust your -writesbr commands accordingly. If you only have one card, you can omit the adapter number. Run the following commands to back up and wipe each of the cards:

megarec -writesbr 0 sbrempty0.bin

megarec -writesbr 1 sbrempty1.bin

megarec -cleanflash 0

megarec -cleanflash 1

(Reboot back to USB drive.)

Once I backed up and wiped all the cards, I rebooted the server. When it came online (again in FreeDOS), I could flash the cards with the IT mode firmware using the following commands:

sas2flsh -o -f 2118it.bin -c 0

sas2flsh -o -f 2118it.bin -c 1

sas2flsh -o -sasadd 500605bXXXXXXXXX -c 0

sas2flsh -o -sasadd 500605bXXXXXXXXX -c 1

(Shut down and remove USB drive.)

There are a couple of things to note here. As above, the -c 0 and -c 1 at the end of these commands specify the controller number. If you’re also following the guide I linked above, you may notice that I’ve left out the flag to flash a BIOS (-b mptsas2.rom) in the first set of commands. This is because I don’t need a BIOS on these cards for my purposes; you will need the BIOS if you want to boot from any of the drives attached to the controller (but don’t do that... Either use USB drives or connect your SSDs directly to the motherboard SATA ports). I’ve included the latest BIOS file in the zip just in case someone needs it; just add -b mptsas2.rom to the end of the first (set of) command(s), but again, you really shouldn’t need it. The last thing to note is the SAS addresses in the second set of commands. The XXXXXXXXX part should be replaced with last part of the SAS address of that controller (without the dashes). Make sure the address matches up with the correct card; you can run sas2flsh -listall to check the PCI addresses if you aren’t sure which controller number maps to which physical card. The -listall command requires firmware to be flash to the card or else it will throw an error and prompt for the firmware filename, so run it after the -f commands. After all the cards were flashed, I powered down the server, removed the USB drive, and prepared to install FreeNAS.

I downloaded the latest FreeNAS 9.10 ISO from here, used Rufus again to make a bootable USB drive with it, and started the install process by booting off the USB stick. The FreeNAS installation process in very easy. When selecting the boot volume, I checked off both my SSDs and FreeNAS handled the mirroring automatically. After the installation finished, I rebooted the system from the SSDs and the FreeNAS web UI came online a few minutes later.

Initial FreeNAS Configuration

The very first thing I did in the FreeNAS configuration is change the root password and enable SSH. I also created a group and user for myself (leaving the home directory blank to start with) so I didn’t have to do everything as root. If you’re having trouble getting in via SSH, make sure the SSH service is actually enabled; in the web UI, go to Services > Control Services and click the SSH slider to turn the service on.

With SSH access set up, I connected to a terminal session with my new FreeNAS machine and followed this guide on the FreeNAS forums for most of my initial setup, with a few minor modifications. The text in this section is largely based off that guide. My first step is to determine the device names for all the installed disks. You can do this by running:

camcontrol devlist

After determining the device names, I did a short SMART test on each of my drives using:

smartctl -t short /dev/da<#>

Where da<#> is the device name from the camcontrol devlist output. The test only takes a couple minutes and you can view the results (or the ongoing test progress) using:

smartctl -a /dev/da<#>

After checking that all the SMART tests passed, I created my primary volume. My process was a little non-standard because I moved my 4TB drives into the server after I transferred the data off them, so I’ll go through my process first and discuss the standard process afterwards. However, before diving into that, I want to review how ZFS allocates disk space and how it can be tuned to minimize storage overhead (by as much as 10 percent!). This next section gets pretty technical and if you aren’t interested in it, you can skip it for now.

Calculating & Minimizing ZFS Allocation Overhead

Calculating the disk allocation overhead requires some math and an understanding of how ZFS stripes data across your disks when storing files. Before we get into the math, let’s take a look at how ZFS stores data by discussing two examples:

Storing a very small file, and

Storing a large(r) file.

We’ll start out with the small file. Hard disks themselves have a minimum storage unit called a “sector”. Because a sector is the smallest unit of data a hard disk can write in a single operation, any data written to a disk that is smaller than the sector size will still take up the full sector. It's still possible for a drive to perform a write that's smaller than its sector size (for instance, changing a single byte in an already-written sector), but it needs to first read the sector, modify the relevant part of the sector's contents, and then re-write the modified data. Obviously this sequence of three operations will be a lot slower than simply writing a full sector’s worth of data. This read-write-modify cycle is called "write amplification".

On older hard drives (pre ~2010), the user data portion of a sector (the part we care about) is typically 512 bytes wide. Newer drives (post ~2011) use 4096-byte sectors (4KiB, or simply 4K). Each hard disk sector also has some space for header information, error-correcting code (ECC), etc., so the total sector size is actually 577 bytes on older drives and 4211 bytes on newer drives, but we only care about the portion in each sector set aside for user data; when I refer to a “sector”, I’m referring only to the user data portion of that sector.

Because the hard disk sector size represents the smallest possible unit of storage on that disk, it is obviously a very important property for ZFS to keep track of. ZFS keeps track of disk sector sizes through the “alignment shift” or ashift parameter. The ashift parameter is calculated as the base 2 logarithm of a hard disk’s sector size and is set per virtual device (“vdev”). ZFS will attempt to automatically detect the sector size of its drives when you create a vdev; you should always double-check that the ashift value is set accurately on your vdev as some hard disks do not properly report their sector size. For a vdev made up of older disks with 512-byte sectors, the ashift value for that vdev will be 9 (\(2^9 = 512\)). For a vdev made up of newer disks with 4096-byte sectors, the ashift value for that vdev will be 12 (\(2^{12} = 4096\)). Obviously, mixing disks with 512-byte sectors and disks with 4096-byte sectors in a single vdev can cause issues and isn’t recommended; if you set ashift = 9 in a vdev with 4K drives, performance will be greatly degraded as every write will require the read-modify-write operation sequence I mentioned above in order to complete. It follows then that 2^ashift then represents the smallest possible I/O operation that ZFS can make on a given vdev (or at least before we account for parity data added on by RAID-Z).

Let’s quickly review how data is stored on a “striped” RAID configuration (i.e., RAID 5, RAID 6, RAID-Z, RAID-Z2, and RAID-Z3) before going any further. On these RAID configurations, the data stored on the array will be spread across all the disks that make up that array; this is called “striping” because it writes the data in “stripes” across all the disks in the array. You can visualize this with a 2-dimensional array: the columns of the array are the individual disks and the rows are the sectors on those disks (a full row of sectors would then be called a “stripe”).

When you write data to a RAID 5 or RAID 6 system, the RAID controller (be it hardware or software) will write that data across the stripes in the array, using one sector per disk (or column). Obviously, when it hits the end of a row in the array, it will loop back around to the first column of the next row and continue writing the data. RAID 5 and RAID 6 systems can only handle full-stripe writes and will always have 1 parity sector per stripe for RAID 5 and 2 parity sectors per stripe for RAID 6. The parity data is not stored on the same disk(s) in every row otherwise there would be a lot of contention to access that disk. Instead, the parity sectors are staggered, typically in a sort of barber pole fashion, so that when you look at the whole array, each disk has roughly the same number of parity sectors as all the others. Again, this ensures that in the event of a bunch of small writes that should only involve writing to two or three disks, one disk is bogged down handling all the parity data for every one of those writes. Because RAID 5 and 6 can only handle full-stripe writes, if it's told to write data that is smaller than a single stripe (minus the parity sectors), it needs to read the data in that stripe, modify the relevant sectors, recalculate the parity sector(s), and rewrite all sectors in the stripe. Very similar to the write amplification example above, this long sequence of events to handle a single small write ends up hobbling performance.

RAID-Z can handle partial-stripe writes far more gracefully. It simply makes sure that for every block of data written, there are \(p\) parity sectors per stripe of data, where \(p\) is the parity level (1 for Z1, 2 for Z2, and 3 for Z3). Because ZFS can handle partial-stripe writes, ZFS doesn't pay special attention to making sure parity sectors are "barber poled" as in RAID 5 and 6. Lots of small write operations that would cause contention for a single parity disk as above would just get their own pairty blocks in their own partial-stripe write. It should be noted that ZFS stripes the data down the array rather than across it, so if the write data will occupy more than a single stripe, the second sector of the data will be written directly under the first sector (on the next sector in the same disk) rather than directly to the right of it (on a sector on the next disk). It still wraps the data around to the next disk in a similar fashion to RAID 5 and 6, it just does it in a different direction. If the write data fits in a single stripe, it stripes the data across the array in an almost identical manner to RAID 5 and 6. ZFS's vertical RAID-Z stripe orientation doesn't really impact anything we'll discuss below, but it is something to be aware of.

Getting back on track, we were discussing the smallest possible writes one can make to a ZFS array. Small writes will obviously be used for small file sizes (on the order of a couple KiB). The smallest possible write ZFS can make to an array is:

$$ n_{min} = 1+p $$

As above, p is the parity level (1 for RAID-Z1, 2 for RAID–Z2, and 3 for RAID-Z3) and the 1 represents the sector for the data itself. So \(n_{min}\) for various RAID-Z configurations will be as follows:

$$ \text{RAID-Z1: } n_{min} = 2 $$

$$ \text{RAID-Z2: } n_{min} = 3 $$

$$ \text{RAID-Z3: } n_{min} = 4 $$

When ZFS writes to an array, it makes sure the total number of sectors it writes is a multiple of this \(n_{min}\) value defined above. ZFS does this to avoid situations where data gets deleted and it ends up with a space on the disk that’s too small to be used (for example, a 2-sector wide space can’t be used by RAID-Z2 because there’s not enough room for even a single data sector and the necessary two parity sectors). Any sectors not filled by user data or parity information are known as “padding”; the data, parity information, and padding make up the full ZFS block. Padding in ZFS blocks is one of the forms of allocation overhead we’re going to look at more closely. Study the table below for a better idea of how block padding can cause storage efficiency loss. Note that this table assumes everything is written to a single stripe; we’ll look at how data is striped and how striping can cause additional overhead in the next section.

| Data, Parity, and Padding Sectors with Efficiency (Note: Assumes Single Stripe) | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Data Sectors |

Parity Sectors | Padding Sectors | Total Sectors (Block Size) |

Efficiency (Data/Total) |

||||||||

| Z1 | Z2 | Z3 | Z1 | Z2 | Z3 | Z1 | Z2 | Z3 | Z1 | Z2 | Z3 | |

| 1 | 1 | 2 | 3 | 0 | 0 | 0 | 2 | 3 | 4 | 50.0% | 33.3% | 25.0% |

| 2 | 1 | 2 | 3 | 1 | 2 | 3 | 4 | 6 | 8 | 50.0% | 33.3% | 25.0% |

| 3 | 1 | 2 | 3 | 0 | 1 | 2 | 4 | 6 | 8 | 75.0% | 50.0% | 37.5% |

| 4 | 1 | 2 | 3 | 1 | 0 | 1 | 6 | 6 | 8 | 66.7% | 66.7% | 50.0% |

| 5 | 1 | 2 | 3 | 0 | 2 | 0 | 6 | 9 | 8 | 83.3% | 55.6% | 62.5% |

| 6 | 1 | 2 | 3 | 1 | 1 | 3 | 8 | 9 | 12 | 75.0% | 66.7% | 50.0% |

| 7 | 1 | 2 | 3 | 0 | 0 | 2 | 8 | 9 | 12 | 87.5% | 77.8% | 58.3% |

| 8 | 1 | 2 | 3 | 1 | 2 | 1 | 10 | 12 | 12 | 80.0% | 66.7% | 66.7% |

| 9 | 1 | 2 | 3 | 0 | 1 | 0 | 10 | 12 | 12 | 90.0% | 75.0% | 75.0% |

| 10 | 1 | 2 | 3 | 1 | 0 | 3 | 12 | 12 | 16 | 83.3% | 83.3% | 62.5% |

| 11 | 1 | 2 | 3 | 0 | 2 | 2 | 12 | 15 | 16 | 91.7% | 73.3% | 68.8% |

| 12 | 1 | 2 | 3 | 1 | 1 | 1 | 14 | 15 | 16 | 85.7% | 80.0% | 75.0% |

| 13 | 1 | 2 | 3 | 0 | 0 | 0 | 14 | 15 | 16 | 92.9% | 86.7% | 81.3% |

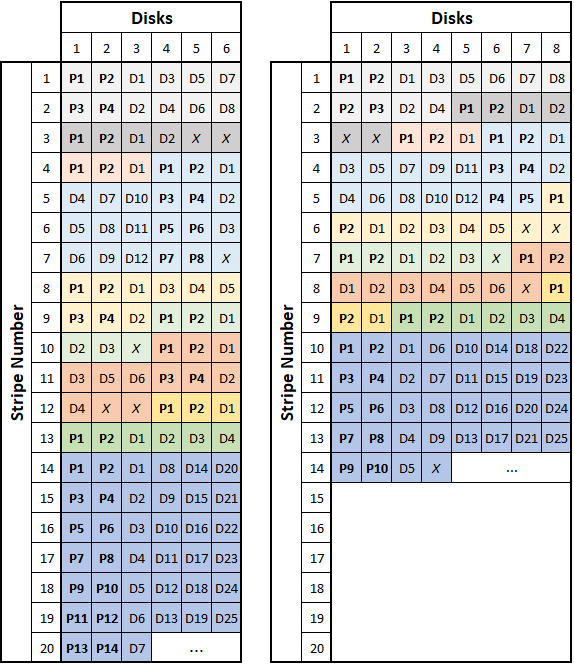

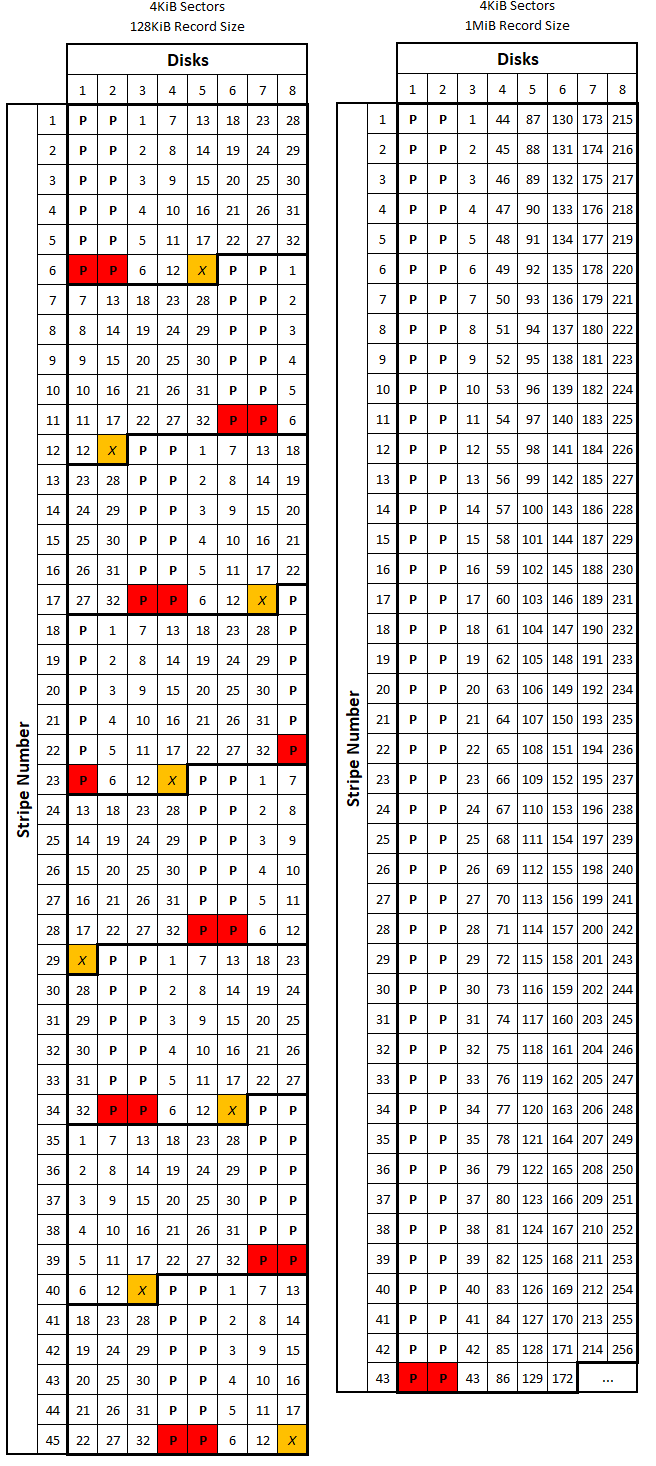

If the data you’re writing fits in a single stripe, ZFS will allocate the block based on the above table, again making sure that the block size is a factor of \(n_{min}\). When the data you’re writing doesn’t fit in a single stripe, ZFS simply stripes the data across all the disks in the array (again, in the vertical orientation discussed above) making sure that there is an appropriate quantity of parity sectors per stripe. It will still make sure the size of the block (which is now spread across multiple stripes and contains multiple sets of parity sectors) is a factor of \(n_{min}\) to avoid the situation outlined above. When considering how ZFS stripes its data, remember that RAID-Z can handle partial stripe writes. This means that RAID-Z parity information is associated with each block rather than with each stripe; thus it is possible to have multiple sets of parity sectors on a given disk stripe if there are multiple blocks per stripe. The below figures show (roughly) how ZFS might store several data blocks of varying sizes on a 6-wide and an 8-wide RAID-Z2 array. Data sectors are preceded by a "D", parity sectors by a "P", and padding sectors are indicated by an "X". Each set of colored squares represents a different ZFS block.

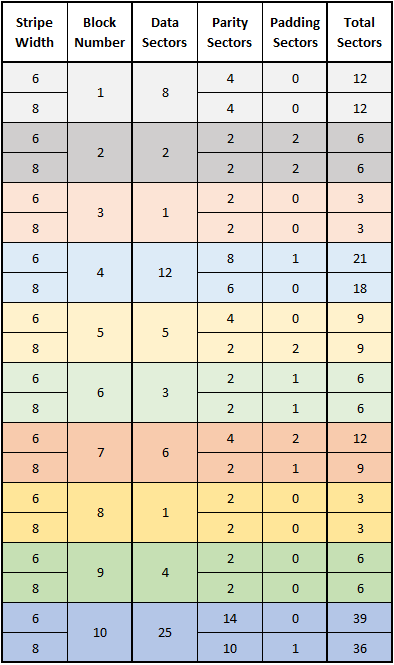

If we define \(w\) as the stripe width (or the number of disks in the array), we can see that ZFS will make sure there are \(p\) parity sectors for every set of \(1\) to \(w-p\) data sectors so a disk failure doesn’t compromise the stripe. In other words, if there are between \(1\) and \(w-p\) user data sectors, the ZFS block will have \(p\) total parity sectors. If there are between \((w-p)+1\) and \(2(w-p)\) user data sectors, the block will have \(2p\) total parity sectors. This point can be tough to conceptualize, but if you study all the examples in the above figure, you should see what I mean by this. It is also interesting to compare the number of total sectors that are required to store a given number of data sectors for the 6-wide and 8-wide RAID-Z2 examples. The table below shows this comparison.

Note first that all the numbers in the “Total Sectors” column are divisible by

$$ n_{min} = 1+p = 1+2 = 3 $$

This is due to the “padding” sectors allocated at the end of the blocks so their lengths are divisible by \(n_{min}\). Because of this, the sequence in which these blocks are stored is irrelevant when we determine how many total sectors will be required to store that data. Comparing the values in the two “Total” columns (particularly for the larger data blocks) hint at the next form of overhead we will cover.

To review, ZFS dynamically sizes data blocks based on the amount of user data and parity in that block. The smallest block size is

$$ n_{min} = 1+p $$

Where \(p\) is the parity level. The blocks can grow in increments of \(n_{min}\). We also defined \(w\) as the stripe width. Next, we’ll look at how larger writes are handled (for files of a couple MiB and larger).

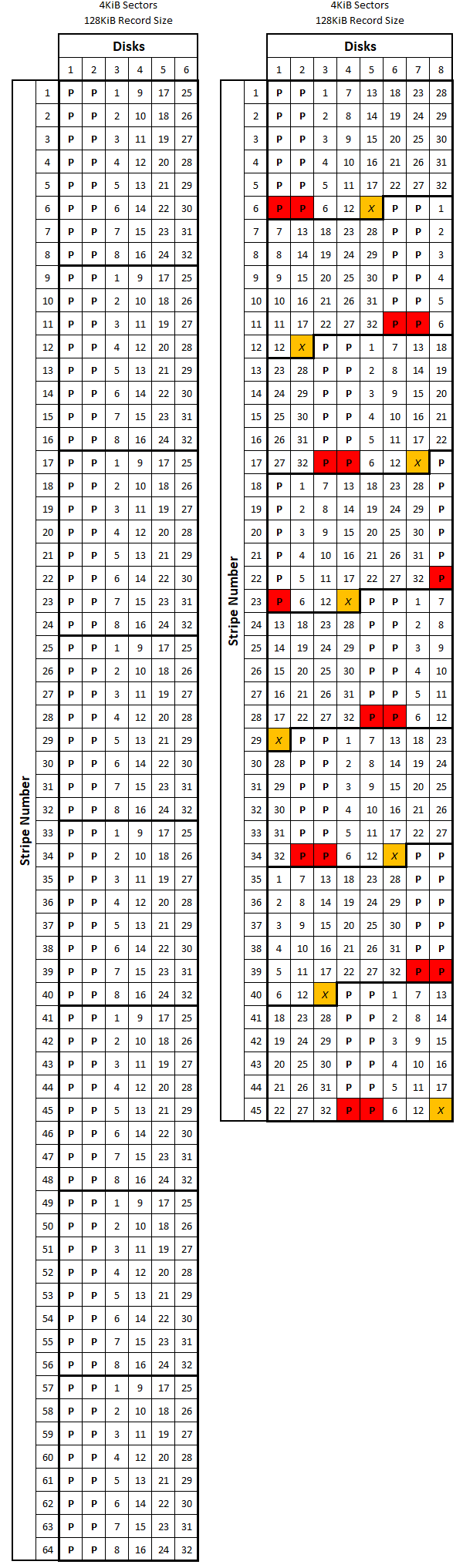

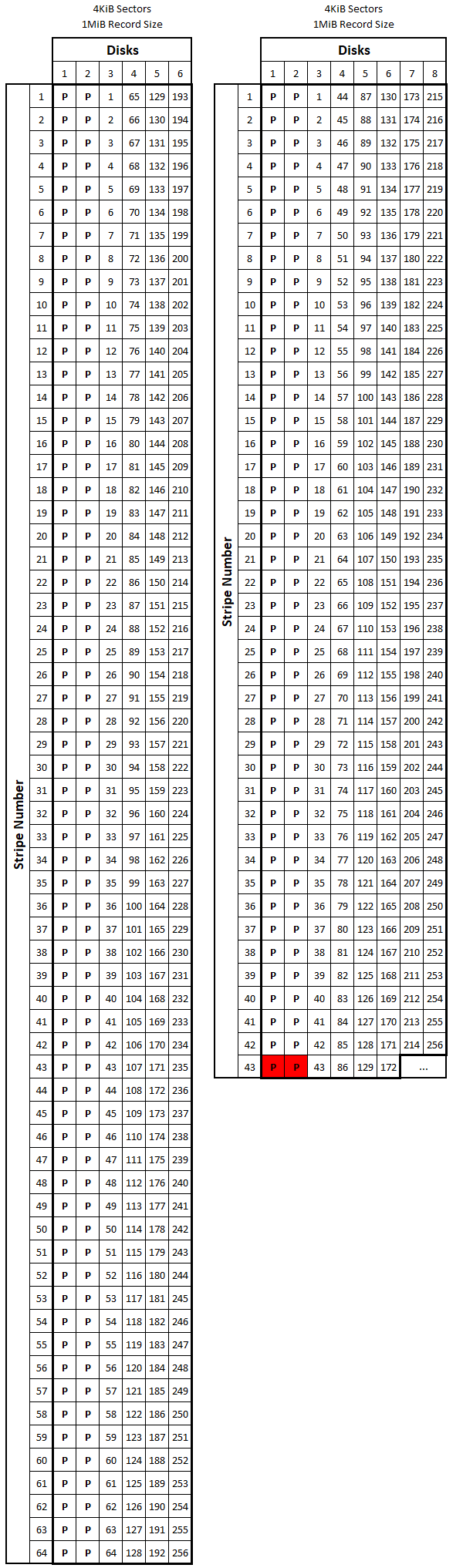

The maximum size of a ZFS data block size is controlled by a user-definable parameter called recordsize. Its value represents the maximum amount of data (before parity and padding) that a file system block can contain. The default value for the recordsize parameter in a FreeNAS is 128KiB, but you can set the value to any power of 2 between 512b and 1MiB. The recordsize parameter can be set per ZFS dataset and even modified after the dataset is created (but will only affect data written after the parameter is changed). You may realize at this point that blocks of length recordsize might not always contain a total number of sectors that is divisible by \(n_{min}\)... We’ll get to this in just a bit.

We now have all four parameters we need to consider when calculating the allocation overhead of a ZFS array: A ZFS block’s recordsize, the vdev’s parity level (\(p\)), the vdev’s stripe width (\(w\)), and disks’ sector size (ashift). The allocation overhead will be calculated as a percentage of the total volume size so it is independent of individual disk size. To help us understand how all of these factors fit together, I will focus on 4 different examples. We will go through the math to calculate allocation overhead (defined below) for each example, then look at them all visually. The four examples are as follows:

| Ex. Num | Parity Level | Stripe Width | recordsize | sector size (ashift) |

|---|---|---|---|---|

| 1 | 2 (RAID-Z2) | 6 | 128KiB |

4KiB (12) |

| 2 | 2 (RAID-Z2) | 8 | 128KiB |

4KiB (12) |

| 3 | 2 (RAID-Z2) | 6 | 1MiB |

4KiB (12) |

| 4 | 2 (RAID-Z2) | 8 | 1MiB |

4KiB (12) |

As we will see later on, the parity level, stripe width, and ashift values are typically held constant while the recordsize value can be tuned to suit the application and maximize the storage efficiency by minimizing allocation overhead. If the parity level and stripe width are not held constant, decreasing parity level and/or increasing stripe width will always increase overall storage efficiency (more on this below). The ashift parameter should not be adjusted unless ZFS incorrectly computed its value.

For the first example, we’ll look at a 6-wide RAID-Z2 array with 4KiB sectors and a recordsize of 128KiB. 128KiB of data represents 128KiB/4KiB = 32 total sectors worth of user data. Since we’re using RAID-Z2, we need 2 parity sectors per stripe, leaving 6-2 = 4 sectors per stripe for user data. 32 total sectors divided by 4 user data sectors per stripe gives us 8 total stripes. 8 stripes * 2 parity sectors per stripe gives us 16 total parity sectors. 16 parity sectors + 32 data sectors give us 48 total sectors, which is divisible by 3, so no padding sectors are needed. In this example, you will notice that all the numbers divided into each other nicely. Unfortunately, this is not always the case in every configuration.